Generative artificial intelligence has revolutionized how organizations approach content creation, data analysis, and automated decision-making. However, as enterprises increasingly adopt generative AI solutions, they encounter significant financial challenges that can quickly escalate beyond initial projections. Understanding and managing these costs effectively has become crucial for sustainable AI implementation and business success.

The complexity of generative AI cost management stems from multiple interconnected factors, including computational resource requirements, data processing expenses, model training costs, and ongoing operational overhead. Organizations must navigate these challenges while ensuring their AI investments deliver measurable value and maintain competitive advantages in their respective markets.

This comprehensive guide examines the key cost challenges faced by generative AI (GenAI) models and provides actionable strategies for optimizing AI-related expenditures. By understanding the primary cost drivers and implementing effective management practices, businesses can harness the power of generative AI while maintaining financial control and operational efficiency.

What is Generative AI Cost Management?

Generative AI cost management encompasses the systematic approach to monitoring, analyzing, and optimizing expenses associated with deploying and maintaining artificial intelligence systems that create new content, data, or insights. Unlike traditional software applications, generative AI solutions require substantial computational resources, specialized infrastructure, and ongoing operational support that can significantly impact organizational budgets.

The scope of generative AI cost management extends beyond simple infrastructure expenses to include data acquisition and preparation, model development and training, inference operations, compliance requirements, and governance frameworks. Organizations must consider both direct costs such as cloud computing resources and indirect costs including personnel training, security measures, and regulatory compliance.

Effective cost management in this context requires understanding the unique characteristics of pre-trained, multi-task generative AI models and their resource consumption patterns. These models often demand high-performance computing environments, extensive data storage capabilities, and continuous monitoring systems that contribute to overall operational expenses.

Major Cost Drivers in Generative AI Projects

Computational Infrastructure Costs

The most significant expense category for most generative AI implementations is computational infrastructure, particularly GPUs and other specialized processors. Costs in this area stem from the intensive processing demands of large language models, image generation systems, and multi-modal AI applications that require substantial parallel computing capabilities.

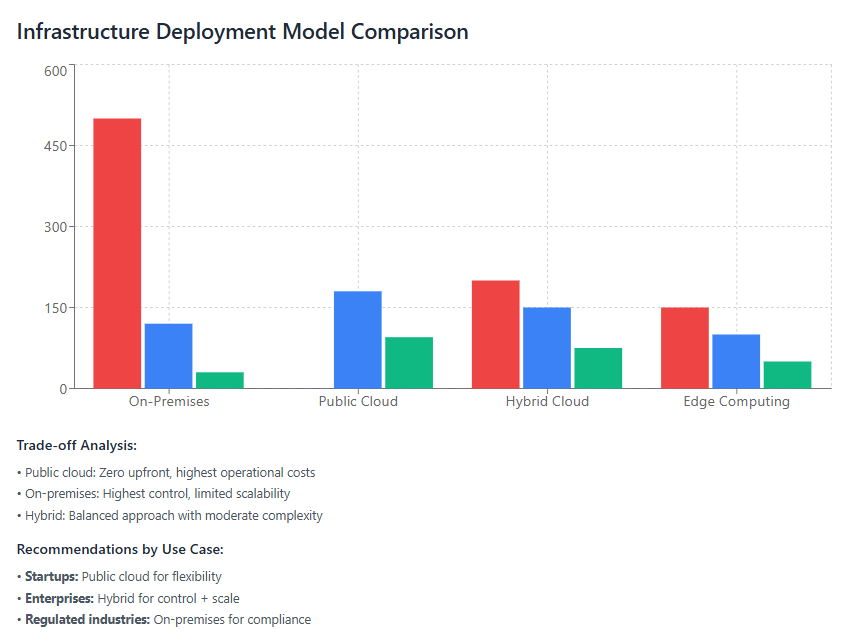

Organizations typically encounter costs ranging from thousands to millions of dollars annually depending on their AI workload requirements and chosen infrastructure approach. Cloud-based solutions offer flexibility but can become expensive with sustained high-usage patterns, while on-premises deployments require significant upfront capital investments but may provide better long-term cost control.
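One way to make the cloud versus on-premises trade-off concrete is a simple break-even calculation: how many months of avoided cloud spend it takes to recover the upfront hardware investment. The sketch below is a deliberately simplified model. It assumes flat monthly costs on both sides and ignores financing, depreciation schedules, and hardware refresh, and all figures in the example are hypothetical.

```python
from typing import Optional


def breakeven_months(onprem_capex: float,
                     onprem_monthly_opex: float,
                     cloud_monthly_cost: float) -> Optional[float]:
    """Months until cumulative on-premises cost falls below cumulative cloud cost.

    Returns None when on-premises running costs are not lower than the cloud
    bill, in which case the upfront investment is never recovered.
    """
    monthly_savings = cloud_monthly_cost - onprem_monthly_opex
    if monthly_savings <= 0:
        return None
    return onprem_capex / monthly_savings


# Hypothetical figures: $400k of hardware plus $10k/month for power, space,
# and staff, versus a $30k/month cloud bill for the same sustained workload.
print(breakeven_months(400_000, 10_000, 30_000))  # 20.0
```

If the hardware's useful life, or the workload's expected lifetime, is shorter than the break-even period, the on-premises investment is not recovered under these assumptions.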

The volatility of GPU market pricing and availability further complicates infrastructure cost planning. Organizations must balance performance requirements with budget constraints while ensuring adequate capacity for peak usage periods and future growth requirements.

Data Storage and Management Expenses

Generative AI models require massive datasets for training and ongoing operations, creating substantial storage and data management costs. Organizations must maintain multiple data copies for different environments, implement robust backup systems, and ensure high-speed access to training datasets during model development phases.

Data storage costs compound when organizations work with multimodal datasets including text, images, audio, and video content. These diverse data types require different storage strategies and access patterns, leading to complex storage architectures with varying cost structures and performance characteristics.

Additionally, data privacy and security requirements often mandate specialized storage solutions with enhanced encryption, access controls, and audit capabilities, further increasing overall storage expenses and operational complexity. Understanding cloud storage optimization becomes crucial for managing these expanding data requirements efficiently.

Personnel and Expertise Investments

The specialized nature of generative AI technology requires significant investments in skilled personnel including AI researchers, machine learning engineers, data scientists, and infrastructure specialists. The competitive market for AI talent drives compensation levels significantly above traditional IT roles, creating substantial ongoing operational expenses.

Organizations must also invest in continuous training and development programs to keep their teams current with rapidly evolving AI technologies, frameworks, and best practices. This includes conference attendance, certification programs, and ongoing education initiatives that contribute to overall project costs.

The scarcity of experienced generative AI professionals often forces organizations to rely on external consultants and contractors, adding another layer of expense while potentially creating knowledge transfer challenges and long-term dependency risks.

Data Preparation and Training Cost Management

Data Acquisition and Preprocessing Expenses

Data preparation challenges for generative AI often center on the enormous volumes of high-quality data required for effective model training. Organizations must acquire, clean, and preprocess datasets that can include billions of text tokens, millions of images, or extensive audio recordings, depending on their specific AI applications.

Data acquisition costs vary significantly based on source types and quality requirements. Publicly available datasets may be free but often require extensive cleaning and validation, while premium commercial datasets provide higher quality but at substantial licensing costs. Organizations must balance data quality requirements against budget constraints while ensuring compliance with licensing terms and usage restrictions.

The preprocessing phase involves significant computational resources for data cleaning, normalization, augmentation, and format conversion activities. These operations often require specialized software tools, high-performance computing resources, and substantial time investments from skilled data professionals.

Training Infrastructure Optimization

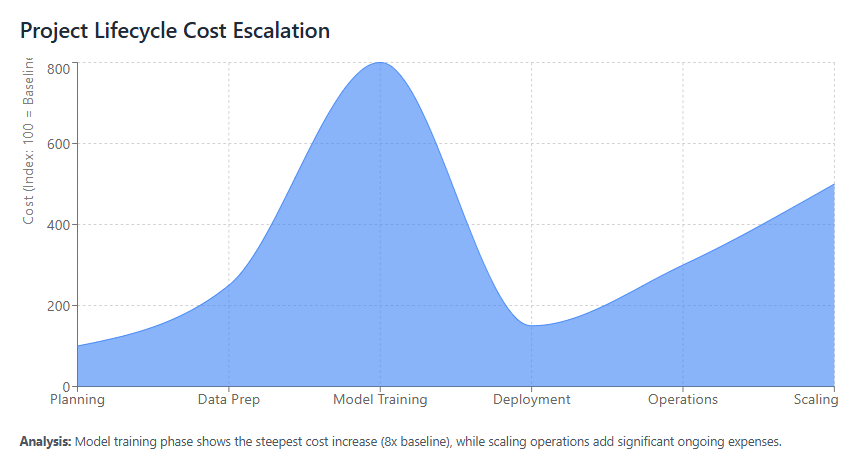

Model training represents one of the most expensive phases of generative AI implementation, often requiring weeks or months of continuous high-performance computing resources. Organizations must carefully plan training schedules, resource allocation, and cost optimization strategies to manage these substantial expenses effectively.
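Training budgets can be roughed out before any hardware is reserved. The sketch below uses a widely cited rule of thumb for dense transformer models, training compute ≈ 6 × parameters × training tokens (in FLOPs), then converts that to GPU-hours under an assumed sustained utilization. The peak throughput, utilization, and hourly price in the example are hypothetical placeholders, not vendor figures, and the result is an order-of-magnitude estimate rather than a quote.

```python
def training_cost_estimate(n_params: float,
                           n_tokens: float,
                           peak_flops_per_gpu: float,
                           utilization: float,
                           usd_per_gpu_hour: float) -> tuple[float, float]:
    """Rough (GPU-hours, USD) for training a dense transformer from scratch.

    Uses the common approximation: training FLOPs ~= 6 * parameters * tokens.
    """
    total_flops = 6.0 * n_params * n_tokens
    # Real jobs achieve only a fraction of peak throughput (communication,
    # data loading, kernel efficiency), captured here by `utilization`.
    sustained_flops_per_gpu = peak_flops_per_gpu * utilization
    gpu_hours = total_flops / sustained_flops_per_gpu / 3600.0
    return gpu_hours, gpu_hours * usd_per_gpu_hour


# Hypothetical inputs: 7B parameters, 1T tokens, 1e15 peak FLOP/s per GPU,
# 40% utilization, $2.00 per GPU-hour.
hours, cost = training_cost_estimate(7e9, 1e12, 1e15, 0.40, 2.00)
print(f"{hours:,.0f} GPU-hours, about ${cost:,.0f}")
```

Because cost scales linearly with both model size and token count, halving either one roughly halves the estimate, which is a quick way to compare candidate training plans.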

Distributed training approaches can reduce overall training time but require sophisticated orchestration systems and high-bandwidth networking infrastructure. The complexity of managing distributed training environments adds operational overhead while potentially providing cost benefits through reduced time-to-deployment.

Checkpoint management and training resumption capabilities become crucial for cost control, allowing organizations to recover from infrastructure failures without losing substantial training progress and associated computational investments.
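The cost value of checkpointing is that a failure only wastes the work done since the last checkpoint. The toy loop below illustrates the pattern: it periodically saves its state, writes each checkpoint to a temporary file before renaming it into place, and resumes from the saved state on restart. The "training" step is a stand-in multiplication, and the function and file names are hypothetical. A real job would save model weights, optimizer state, and data-loader position with its framework's own serialization tools.

```python
import os
import pickle
import tempfile
from typing import Optional


def train(total_steps: int, ckpt_path: str, ckpt_every: int,
          fail_at: Optional[int] = None) -> dict:
    """Toy training loop that checkpoints periodically and resumes after a failure."""
    # Resume from the latest checkpoint if one exists; otherwise start fresh.
    if os.path.exists(ckpt_path):
        with open(ckpt_path, "rb") as f:
            state = pickle.load(f)
    else:
        state = {"step": 0, "loss": 1.0}

    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError(f"simulated node failure at step {fail_at}")
        state["loss"] *= 0.99  # stand-in for a real optimizer update
        state["step"] += 1
        if state["step"] % ckpt_every == 0:
            # Write to a temporary file and then rename it, so a crash during
            # the write cannot corrupt the previous good checkpoint.
            tmp_path = ckpt_path + ".tmp"
            with open(tmp_path, "wb") as f:
                pickle.dump(state, f)
            os.replace(tmp_path, ckpt_path)
    return state


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.pkl")
    try:
        train(300, ckpt, ckpt_every=100, fail_at=250)
    except RuntimeError as err:
        print(err)
    # Only the 50 updates after the step-200 checkpoint are recomputed.
    print(train(300, ckpt, ckpt_every=100))
```

The checkpoint interval is itself a cost trade-off. Frequent checkpoints limit the amount of lost work but add I/O time and storage, while sparse checkpoints reduce that overhead and put more progress at risk.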

Transfer Learning and Model Reuse Strategies

Leveraging pre-trained, multi-task generative AI models provides significant cost advantages by reducing training time, computational requirements, and data needs for specific use cases. Organizations can adapt existing models through fine-tuning processes that require substantially fewer resources than training from scratch.
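Parameter-efficient fine-tuning shows where much of these savings come from. LoRA (low-rank adaptation) is used below as one common example, and the source text does not prescribe a specific method. LoRA freezes each original weight matrix and trains only a small low-rank update. For a d_out × d_in matrix and rank r, that update has r × (d_out + d_in) trainable parameters instead of d_out × d_in. The model shape in the example is hypothetical.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one frozen d_out x d_in weight matrix.

    LoRA learns two small matrices, B (d_out x rank) and A (rank x d_in), and
    adds their scaled product B @ A to the frozen weight W.
    """
    return rank * (d_out + d_in)


def trainable_fraction(weight_shapes: list[tuple[int, int]], rank: int) -> float:
    full = sum(d_out * d_in for d_out, d_in in weight_shapes)
    lora = sum(lora_trainable_params(d_out, d_in, rank) for d_out, d_in in weight_shapes)
    return lora / full


# Hypothetical model: 32 layers, each with four 4096 x 4096 attention projections.
shapes = [(4096, 4096)] * (32 * 4)
print(f"{trainable_fraction(shapes, rank=8):.2%} of those weights are trained")
```

With rank 8, the trainable share of these matrices is 1/256 (about 0.39%), which sharply reduces gradient and optimizer-state memory. The forward and backward passes still run through the full model, however, so compute savings are smaller than this parameter ratio suggests.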

Transfer learning strategies must balance customization needs against additional training costs and time requirements. Organizations should evaluate whether generic pre-trained models meet their requirements or if specialized training justifies the additional expense and complexity.

Model versioning and reuse frameworks help organizations maximize their training investments by enabling multiple applications to leverage shared model components and training efforts across different use cases and departments.

Model Selection Impact on Operational Costs

Performance vs. Cost Trade-offs

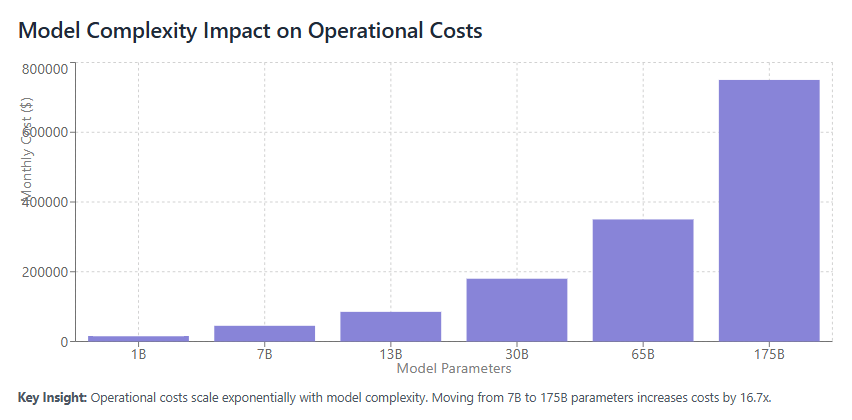

The choice between different model architectures, sizes, and capabilities significantly impacts both initial deployment costs and ongoing operational expenses. Larger models generally provide superior performance but require more computational resources, storage capacity, and operational support throughout their lifecycle.

Organizations must evaluate performance requirements against budget constraints while considering factors such as response time expectations, accuracy requirements, and scalability needs. These trade-offs often involve complex decision-making processes that balance technical capabilities with financial constraints.

Model efficiency optimization through techniques such as pruning, quantization, and distillation can help reduce operational costs while maintaining acceptable performance levels. However, these optimization processes require additional expertise and development time that must be factored into overall project costs.
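The effect of quantization on hardware requirements can be estimated from weight storage alone: parameters × bytes per parameter. For example, a 7-billion-parameter model needs about 14 GB for its weights in 16-bit precision, 7 GB at 8 bits, and 3.5 GB at 4 bits. This often determines whether the model fits on a smaller, cheaper GPU. The sketch below counts weights only, which is a stated simplification.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """GB (1e9 bytes) needed just to store the weights at a given precision.

    Ignores activations, KV cache, and the small overhead of quantization
    metadata such as per-group scales.
    """
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp16", "int8", "int4"):
    print(dtype, weight_memory_gb(7e9, dtype), "GB")
```

Whether a given precision keeps quality acceptable depends on the model and task, so the memory savings should be weighed against evaluation results.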

Multi-Model Management Strategies

Many organizations deploy multiple generative AI models for different use cases, creating complex management challenges and cost optimization opportunities. Effective multi-model strategies can reduce overall costs through resource sharing, common infrastructure utilization, and operational efficiency improvements.

Container orchestration and model serving platforms help organizations optimize resource utilization across multiple models while providing scalability and management benefits. These platforms require initial setup investments but can provide substantial long-term cost savings through improved resource efficiency.
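A simple way to see the resource-sharing benefit is to treat model placement as bin packing. The sketch below uses first-fit decreasing, a standard packing heuristic chosen here for illustration and not a feature of any particular serving platform, to place models on GPUs by memory footprint. The model names and sizes are hypothetical. The sketch also considers memory only and ignores compute contention between models sharing a GPU.

```python
def pack_models(model_mem_gb: dict[str, float], gpu_mem_gb: float) -> list[list[str]]:
    """First-fit decreasing: place each model, largest first, on the first GPU with room."""
    gpus: list[list[str]] = []
    free: list[float] = []
    for name, mem in sorted(model_mem_gb.items(), key=lambda kv: kv[1], reverse=True):
        if mem > gpu_mem_gb:
            raise ValueError(f"{name} needs {mem} GB, more than a single GPU provides")
        for i, room in enumerate(free):
            if mem <= room:
                gpus[i].append(name)
                free[i] -= mem
                break
        else:
            # No existing GPU has room, so allocate a new one.
            gpus.append([name])
            free.append(gpu_mem_gb - mem)
    return gpus


# Hypothetical workloads sharing 80 GB GPUs.
models = {"chat": 40, "code": 30, "summarize": 20, "classify": 6, "embed": 2}
print(pack_models(models, gpu_mem_gb=80))
```

In this example, five models that would each occupy a dedicated GPU fit on two: chat, code, classify, and embed share one GPU, and summarize runs on the other.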

Model lifecycle management becomes crucial for controlling costs as organizations must decide when to retire older models, upgrade to newer versions, or maintain parallel deployments for different applications and user groups.

Scaling Challenges and Cost Implications

Demand Forecasting and Capacity Planning

Key challenges that GenAI models face during scaling include unpredictable demand patterns and the need for substantial infrastructure investments to handle peak usage periods. Organizations must balance capacity planning with cost control while ensuring acceptable performance during high-demand periods.

Auto-scaling capabilities help optimize costs by automatically adjusting resources based on actual demand patterns, but these systems require sophisticated monitoring and configuration to operate effectively. Poorly configured auto-scaling can lead to either inadequate capacity during peak periods or excessive costs during low-usage periods.
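Much of the configuration risk lies in the scaling rule itself. The sketch below follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric ÷ target metric). It skips scaling when the ratio is within a tolerance of 1, and clamps the result to configured bounds. This is a simplified illustration. The real controller also applies stabilization windows and other safeguards, and the utilization numbers in the usage example are hypothetical.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule with a dead band and min/max bounds."""
    ratio = current_util / target_util
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # The bounds cap runaway cost (max) and protect baseline latency (min).
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas running at 90% GPU utilization against a 60% target.
print(desired_replicas(4, 90.0, 60.0, min_replicas=1, max_replicas=10))  # 6
```

The two failure modes described above map directly to parameters. A max_replicas value set too low causes under-capacity at peaks, while a target utilization set too low, or a min_replicas value set too high, keeps expensive capacity idle.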

Demand forecasting becomes particularly challenging for generative AI applications due to the often unpredictable nature of user interactions and the potential for viral adoption of AI-powered features and services. Organizations can benefit from cloud cost monitoring tools that provide real-time visibility into resource utilization patterns.
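A minimal forecasting baseline helps explain why headroom matters. The sketch below uses simple exponential smoothing, chosen here as an illustrative technique rather than a recommendation, to forecast the next period's request rate. It then converts that forecast into a replica count with a safety margin. The throughput-per-replica and headroom values are assumptions. Because smoothing reacts to a sudden viral spike only after it appears in the data, the headroom factor and autoscaling have to absorb the gap.

```python
import math


def ses_forecast(history: list[float], alpha: float) -> float:
    """Simple exponential smoothing: one-step-ahead forecast.

    alpha near 1 tracks recent demand closely; alpha near 0 smooths heavily.
    """
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def replicas_needed(forecast_rps: float, rps_per_replica: float,
                    headroom: float = 1.3) -> int:
    """Provision for the forecast plus a safety margin for unexpected spikes."""
    return math.ceil(forecast_rps * headroom / rps_per_replica)


# Hypothetical hourly request rates (requests per second).
rates = [120.0, 135.0, 128.0, 150.0, 170.0]
forecast = ses_forecast(rates, alpha=0.5)
print(forecast, replicas_needed(forecast, rps_per_replica=40.0))
```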

Geographic Distribution and Edge Computing

Global deployments of generative AI services require careful consideration of infrastructure placement, data residency requirements, and network latency constraints. These factors significantly impact both deployment costs and ongoing operational expenses across different geographic regions.

Edge computing deployments can reduce latency and improve user experience but require distributed infrastructure management and potentially higher per-unit costs due to smaller deployment scales and specialized hardware requirements.

Organizations must balance performance requirements with cost considerations while ensuring compliance with local regulations and data sovereignty requirements that may mandate specific deployment architectures and infrastructure locations.

Performance Monitoring and Optimization

Continuous performance monitoring systems are essential for maintaining cost control during scaling operations, but these systems themselves require significant infrastructure investments and operational support. Organizations must implement comprehensive monitoring solutions that provide visibility into resource utilization, cost trends, and performance metrics.

Real-time cost tracking and alerting systems help prevent budget overruns by providing early warnings when usage patterns exceed expected parameters or when infrastructure costs escalate beyond predetermined thresholds.
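A basic budget alert can combine two checks: spend already close to the budget, and a projection showing that the current burn rate will overshoot it before the period ends. The sketch below uses a straight-line projection, which is a simplifying assumption. The 90% alert fraction and the example figures are hypothetical defaults.

```python
from dataclasses import dataclass


@dataclass
class BudgetStatus:
    spent: float
    projected: float
    alert: bool


def check_budget(daily_spend: list[float], days_in_period: int, budget: float,
                 alert_fraction: float = 0.9) -> BudgetStatus:
    """Alert if spend nears the budget or the burn rate projects an overrun."""
    spent = sum(daily_spend)
    days_elapsed = len(daily_spend)
    # Linear projection: assume the average daily spend so far continues.
    projected = spent / days_elapsed * days_in_period if days_elapsed else 0.0
    alert = spent >= alert_fraction * budget or projected > budget
    return BudgetStatus(spent, projected, alert)


# Ten days at $1,000/day in a 30-day month against a $25,000 budget.
print(check_budget([1000.0] * 10, days_in_period=30, budget=25_000.0))
```

In this example, only 40% of the budget has been spent, but the projection of $30,000 triggers the alert about two-thirds of the way before the overrun would otherwise become visible.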

Performance optimization requires ongoing analysis and tuning efforts that involve skilled personnel and specialized tools, adding to overall operational costs but potentially providing substantial long-term savings through improved efficiency.

Governance and Compliance Costs

Regulatory Compliance Requirements

The regulatory landscape for artificial intelligence continues to evolve rapidly, creating ongoing compliance costs and operational complexity for organizations deploying generative AI solutions. Compliance requirements often mandate specific documentation, audit trails, and operational procedures that add substantial overhead to AI projects.

Data protection regulations such as GDPR, CCPA, and emerging AI-specific legislation require specialized compliance frameworks, legal review processes, and ongoing monitoring systems that contribute to overall project costs. Organizations must invest in compliance infrastructure and expertise to ensure adherence to applicable regulations.

Industry-specific regulations may impose additional requirements for AI deployments in sectors such as healthcare, finance, and government, creating specialized compliance costs and operational constraints that must be factored into project planning and budgeting.

Security and Risk Management

Generative AI systems present unique security challenges that require specialized protection measures, monitoring systems, and incident response capabilities. Security investments include both technology solutions and skilled security professionals with AI-specific expertise.

Risk management frameworks must address potential issues such as model poisoning, adversarial attacks, data leakage, and unintended content generation. These frameworks require ongoing monitoring, testing, and improvement efforts that contribute to operational costs.

Insurance and liability considerations for AI deployments may require specialized coverage and risk assessment processes that add to overall project costs while providing essential protection against potential issues and legal challenges.

Audit and Documentation Requirements

Maintaining comprehensive documentation and audit trails for generative AI systems requires significant ongoing effort and specialized systems. Organizations must document training data sources, model development processes, deployment procedures, and operational changes to support compliance and risk management requirements.
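One lightweight building block for such audit trails is a machine-readable record that ties each model version to content hashes of the exact files it was trained on. Anyone can later verify that the documented data has not changed. The record layout and field names below are a hypothetical example, not a regulatory format. The sketch uses only Python's standard library.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Stream the file in 1 MiB chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def training_audit_record(model_name: str, model_version: str,
                          data_files: list[Path], notes: str = "") -> dict:
    """Build a JSON-serializable record tying a model version to its training data."""
    return {
        "model": model_name,
        "version": model_version,
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "training_data": [
            {"path": str(p), "bytes": p.stat().st_size, "sha256": sha256_file(p)}
            for p in sorted(data_files)
        ],
        "notes": notes,
    }


if __name__ == "__main__":
    sample = Path(tempfile.mkdtemp()) / "train.jsonl"
    sample.write_text('{"text": "example"}\n')
    record = training_audit_record("support-bot", "1.3.0", [sample],
                                   notes="hypothetical example")
    print(json.dumps(record, indent=2))
```

Storing these records alongside the model artifacts, in version control or an append-only store, gives auditors a verifiable link between a deployed model and its documented data sources.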

Audit requirements often mandate third-party assessments and certifications that involve substantial costs for external expertise, testing processes, and ongoing compliance validation. These requirements can significantly impact project budgets and timelines.

Version control and change management systems for AI models require sophisticated tracking capabilities that go beyond traditional software development practices, adding complexity and cost to deployment and operational processes.

Key Challenges Faced by GenAI Models

Resource Optimization Challenges

Resource optimization challenges in generative AI stem from the dynamic nature of AI workloads and the difficulty of predicting resource requirements across different use cases and usage patterns. Organizations struggle to optimize resource allocation while maintaining performance standards and cost control.

Memory management becomes particularly challenging for large language models, which require substantial memory (especially high-bandwidth GPU memory for model weights and inference state) and specialized memory architectures. Organizations must balance memory requirements with cost considerations while ensuring adequate performance for their specific applications.
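For transformer-based language models, a large and often underestimated share of inference memory goes to the key/value (KV) cache. This cache stores attention keys and values for every token in every active request. Its size is 2 × layers × KV heads × head dimension × sequence length × batch size × bytes per element, so it grows linearly with both context length and concurrency. The model configuration in the example is hypothetical.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    """Memory for cached attention keys and values across all layers.

    The leading 2 counts one tensor for keys and one for values;
    bytes_per_elem=2 corresponds to fp16/bf16 storage.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


GIB = 2**30
# Hypothetical 32-layer model, head_dim 128, 4,096-token context, batch of 8, fp16.
print(kv_cache_bytes(32, 32, 128, 4096, 8) / GIB, "GiB with 32 KV heads")
print(kv_cache_bytes(32, 8, 128, 4096, 8) / GIB, "GiB with 8 KV heads (grouped-query attention)")
```

Here the cache alone needs 16 GiB, or 4 GiB when the architecture shares KV heads. This explains why context-length limits, batch sizes, and attention variants directly affect which GPU tier a deployment requires.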

Storage optimization requires careful consideration of data access patterns, performance requirements, and cost structures across different storage tiers and technologies. The complexity of optimizing storage for AI workloads often exceeds traditional application optimization approaches.

Integration and Interoperability Issues

Integrating generative AI models with existing enterprise systems creates substantial technical and cost challenges. Organizations must invest in integration platforms, API management systems, and custom development efforts to connect AI capabilities with their existing technology stacks.

Data integration challenges require specialized tools and expertise to ensure seamless data flow between AI models and enterprise systems while maintaining data quality, security, and performance requirements.

Legacy system compatibility issues may require substantial modernization investments or complex integration solutions that add significant costs to AI deployment projects while potentially limiting functionality and performance.

Quality Assurance and Testing

Testing generative AI systems requires specialized approaches that go beyond traditional software testing methodologies. Organizations must invest in comprehensive testing frameworks that address accuracy, bias, safety, and performance across diverse use cases and scenarios.

Quality assurance processes for AI models require ongoing monitoring and validation efforts that involve both automated systems and human review processes. These requirements create substantial operational overhead and specialized personnel needs.

Bias detection and mitigation requires sophisticated testing approaches and ongoing monitoring systems that add complexity and cost to AI deployment projects while being essential for responsible AI deployment.

Strategic Solutions for Cost Optimization

Cloud Resource Management

Effective cloud resource management provides substantial opportunities for cost optimization in generative AI deployments. Organizations should implement comprehensive resource monitoring, automated scaling policies, and cost allocation frameworks that provide visibility and control over AI-related expenses.

Reserved instance strategies and committed use agreements with cloud providers can provide significant cost savings for predictable AI workloads while maintaining flexibility for variable demand patterns. Organizations must carefully analyze their usage patterns to optimize these purchasing strategies.
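The core of this analysis is a break-even utilization. A commitment is billed for every hour, whereas on-demand capacity is billed only for the hours used, so the commitment pays off once expected utilization exceeds committed rate ÷ on-demand rate. The prices below are hypothetical, and the sketch ignores real-world details such as upfront-payment options and partial-hour billing.

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12, a common billing approximation


def breakeven_utilization(on_demand_hourly: float, committed_hourly: float) -> float:
    """Fraction of hours a resource must be busy before a commitment pays off."""
    return committed_hourly / on_demand_hourly


def monthly_costs(on_demand_hourly: float, committed_hourly: float,
                  utilization: float) -> dict[str, float]:
    """Compare paying on demand for busy hours vs. committing for every hour."""
    return {
        "on_demand": on_demand_hourly * HOURS_PER_MONTH * utilization,
        "committed": committed_hourly * HOURS_PER_MONTH,
    }


# Hypothetical prices: $4.00/hour on demand vs. $2.60/hour with a commitment.
print(breakeven_utilization(4.00, 2.60))  # 0.65
print(monthly_costs(4.00, 2.60, utilization=0.80))
```

At 80% expected utilization the commitment is cheaper, while at 50% it is not. Commitments therefore usually suit the steady baseline of a workload, and the variable portion remains on demand.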

Multi-cloud strategies can help optimize costs by leveraging competitive pricing and specialized capabilities across different providers while requiring sophisticated management frameworks and expertise to implement effectively. Consider exploring multi-cloud management solutions to streamline these complex deployments.

Model Efficiency Improvements

Implementing model optimization techniques such as pruning, quantization, and distillation can substantially reduce operational costs while maintaining acceptable performance levels. These techniques require specialized expertise but can provide significant long-term savings through reduced resource requirements.

Model compression strategies help reduce storage, memory, and computational requirements while potentially improving inference speed and reducing operational costs. Organizations must balance compression benefits against potential performance impacts for their specific use cases.

Efficient model serving architectures can optimize resource utilization through techniques such as request batching, response caching, and specialized hardware that reduce per-inference costs while maintaining performance standards.
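Response caching is the simplest of these techniques to illustrate. When identical prompts recur, a cache returns the stored answer instead of running inference again. The sketch below is a least-recently-used (LRU) cache keyed on a normalized prompt. The `generate` callable stands in for a real model call and is hypothetical. The approach assumes deterministic outputs (for example, temperature 0) and non-personalized prompts. The lowercasing step is also an assumption that would be wrong for case-sensitive tasks.

```python
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """LRU cache for exact-match prompts, so repeated requests skip inference."""

    def __init__(self, generate: Callable[[str], str], max_entries: int = 1024):
        self._generate = generate
        self._max_entries = max_entries
        self._store: "OrderedDict[str, str]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, prompt: str) -> str:
        # Normalize whitespace and case so trivially different prompts share an entry.
        key = " ".join(prompt.split()).lower()
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = self._generate(prompt)
        self._store[key] = result
        if len(self._store) > self._max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result


# Usage with a stand-in for an expensive model call.
cache = ResponseCache(lambda p: f"answer to: {p}", max_entries=100)
for prompt in ["What is our refund policy?", "what is our  refund policy?"]:
    cache.get(prompt)
print(cache.hits, cache.misses)  # 1 1
```

The hit rate indicates how much inference spend the cache avoids. Tracking it helps decide whether a more elaborate semantic cache is worth building.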

Operational Excellence Practices

Implementing comprehensive monitoring and alerting systems helps organizations maintain cost control through early detection of usage anomalies, performance issues, and cost escalation patterns. These systems require initial investments but provide substantial long-term benefits through improved operational visibility.
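A common starting point for spotting usage anomalies is a trailing-window z-score. A day is flagged when its spend deviates from the recent mean by more than a chosen number of standard deviations. The 14-day window and threshold of 3 below are illustrative defaults, not recommendations, and this simple method will not account for weekly seasonality.

```python
from statistics import mean, stdev


def spend_anomalies(daily_spend: list[float], window: int = 14,
                    z_threshold: float = 3.0) -> list[int]:
    """Indices of days whose spend deviates more than z_threshold standard
    deviations from the mean of the preceding `window` days."""
    flagged = []
    for i in range(window, len(daily_spend)):
        past = daily_spend[i - window:i]
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if daily_spend[i] != mu:
                flagged.append(i)
            continue
        if abs(daily_spend[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily GPU spend: stable around $100, then a runaway job.
history = [100.0, 102.0, 98.0, 101.0, 99.0] * 3 + [400.0]
print(spend_anomalies(history))  # [15]
```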

Automated cost optimization policies can help reduce expenses through intelligent resource scheduling, unused resource cleanup, and dynamic scaling based on actual demand patterns. These automation systems require careful configuration and ongoing maintenance but can provide significant cost savings.
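Unused-resource cleanup usually starts as a report and only later becomes an automated action. The sketch below flags GPU instances whose average utilization stays below a threshold, skips anything carrying a protection tag, and estimates the monthly savings. The `Instance` fields, the 5% threshold, and the "keep" tag are hypothetical. The sketch deliberately calls no cloud API, so it only produces a dry-run list for human review.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


@dataclass
class Instance:
    instance_id: str
    hourly_cost: float
    avg_gpu_util_7d: float  # average over 7 days, 0.0 to 1.0
    tags: dict[str, str]


def cleanup_candidates(instances: list[Instance], util_threshold: float = 0.05,
                       protected_tag: str = "keep") -> tuple[list[Instance], float]:
    """Dry run: list idle, unprotected instances and their monthly cost."""
    candidates = [
        inst for inst in instances
        if inst.avg_gpu_util_7d < util_threshold and protected_tag not in inst.tags
    ]
    monthly_savings = sum(inst.hourly_cost for inst in candidates) * HOURS_PER_MONTH
    return candidates, monthly_savings


fleet = [
    Instance("gpu-a", 2.0, 0.01, {}),
    Instance("gpu-b", 2.0, 0.01, {"keep": "true"}),  # idle but protected
    Instance("gpu-c", 3.0, 0.60, {}),                 # busy
]
idle, savings = cleanup_candidates(fleet)
print([i.instance_id for i in idle], savings)
```

Protection tags and a review step are one way to provide the "careful configuration" the paragraph above calls for. They prevent the policy from stopping a legitimately quiet but essential resource.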

Regular cost reviews and optimization assessments help organizations identify improvement opportunities and ensure their AI investments continue to provide optimal value. These reviews should include technical, operational, and business perspectives to ensure comprehensive optimization.

Best Practices for Sustainable AI Operations

Financial Planning and Budgeting

Effective financial planning for generative AI projects requires comprehensive understanding of both direct and indirect costs throughout the entire project lifecycle. Organizations should develop detailed cost models that account for infrastructure, personnel, data, compliance, and operational expenses.

Budget allocation strategies should include contingency planning for cost escalation scenarios and provision for ongoing optimization efforts that may require additional investments to achieve long-term savings. Regular budget reviews help ensure projects remain financially viable and aligned with organizational objectives.

Cost tracking and reporting systems should provide real-time visibility into AI-related expenses across different categories and projects, enabling proactive management and optimization decisions based on actual usage patterns and performance metrics.
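Most category-level reporting comes down to grouping billing line items by tags. The sketch below aggregates cost records by project and category, and it places anything missing a tag in an explicit "untagged" bucket so unallocated spend stays visible. The record fields are hypothetical and would need to be mapped from a given provider's billing export format.

```python
from collections import defaultdict


def allocate_costs(records: list[dict],
                   keys: tuple[str, ...] = ("project", "category")) -> dict[tuple, float]:
    """Sum costs per tag combination; missing tags go to an 'untagged' bucket."""
    totals: dict[tuple, float] = defaultdict(float)
    for record in records:
        group = tuple(record.get(k, "untagged") for k in keys)
        totals[group] += record["cost"]
    return dict(totals)


# Hypothetical billing line items.
line_items = [
    {"project": "chatbot", "category": "inference", "cost": 120.0},
    {"project": "chatbot", "category": "inference", "cost": 30.0},
    {"project": "search", "category": "training", "cost": 500.0},
    {"cost": 25.0},  # missing tags: shows up as unallocated spend
]
for group, total in sorted(allocate_costs(line_items).items()):
    print(group, total)
```

A growing "untagged" total is often the first sign that tagging policies are not being enforced.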

Vendor Management and Procurement

Strategic vendor relationships can provide substantial cost advantages through volume discounts, preferred pricing, and access to specialized expertise and technologies. Organizations should develop comprehensive vendor management strategies that balance cost optimization with performance and reliability requirements.

Contract negotiation strategies should address specific AI workload requirements including performance guarantees, scalability provisions, and cost optimization mechanisms such as committed use discounts and flexible pricing models.

Multi-vendor strategies can help optimize costs and reduce dependency risks while requiring sophisticated management approaches to coordinate across different providers and maintain operational consistency.

Team Development and Knowledge Management

Investing in internal AI expertise provides long-term cost benefits by reducing dependency on external consultants and enabling more effective cost optimization decisions. Organizations should develop comprehensive training programs and career development paths for AI-related roles.

Knowledge management systems help organizations capture and share AI-related expertise across teams and projects, reducing duplication of effort and improving overall efficiency. These systems should include technical documentation, best practices, and lessons learned from previous projects.

Cross-functional collaboration between AI teams, finance departments, and business stakeholders helps ensure cost optimization efforts align with business objectives while maintaining technical performance and operational requirements.

Future Trends in AI Cost Management

Emerging Technologies and Cost Implications

Advances in AI hardware, including specialized processors and neuromorphic computing, may provide substantial cost advantages for specific AI workloads, and quantum computing may eventually do the same. All of these require new expertise and infrastructure investments.

Edge AI deployment strategies continue to evolve with improved hardware capabilities and reduced costs, potentially providing cost advantages for specific use cases while requiring distributed management approaches and specialized optimization techniques.

Automated AI operations (AIOps) technologies promise to reduce operational costs through intelligent automation of routine tasks, predictive maintenance, and optimization recommendations based on historical patterns and performance data.

Industry Standards and Best Practices

Emerging industry standards for AI cost management and optimization will help organizations benchmark their performance and adopt proven cost optimization strategies. These standards will likely address areas such as resource utilization metrics, cost allocation methodologies, and optimization best practices.

Open source tools and frameworks for AI cost management continue to mature, providing organizations with sophisticated cost optimization capabilities without the need for substantial commercial software investments.

Industry collaboration and knowledge sharing initiatives help organizations learn from collective experiences and avoid common cost optimization pitfalls while implementing proven strategies and best practices.

Conclusion

Generative AI cost management represents a critical capability for organizations seeking to harness the transformative potential of artificial intelligence while maintaining financial control and operational efficiency. The cost-management challenges of generative AI are substantial and multifaceted, requiring comprehensive strategies that address infrastructure, operations, governance, and strategic considerations.

Success in managing generative AI costs requires a holistic approach that combines technical expertise, financial discipline, and strategic thinking. Organizations must develop a sophisticated understanding of their AI workload characteristics, implement comprehensive monitoring and optimization systems, and maintain ongoing focus on cost optimization throughout the entire AI lifecycle.

The key challenges facing GenAI models continue to evolve as technology advances and organizational adoption increases. However, organizations that invest in comprehensive cost management capabilities and maintain focus on optimization will be better positioned to realize the full potential of generative AI investments while maintaining competitive advantages and financial sustainability.

Through careful planning, strategic implementation, and ongoing optimization efforts, organizations can successfully navigate the complex landscape of generative AI cost management while achieving their AI transformation objectives. The investment in proper cost management practices provides substantial returns through improved operational efficiency, reduced financial risk, and enhanced ability to scale AI initiatives across the enterprise.

As the generative AI landscape continues to mature, organizations that establish strong cost management foundations will be better positioned to adapt to new technologies, optimize their investments, and maintain competitive advantages in their respective markets through effective and efficient AI deployment strategies.

For organizations looking to implement comprehensive cost management for their AI initiatives, exploring specialized cloud cost optimization platforms can provide the tools and insights needed to maintain financial control while scaling AI operations effectively.