Large Language Model (LLM) APIs have fundamentally transformed how businesses integrate artificial intelligence into their applications and workflows. As organizations increasingly rely on cloud-based AI services, understanding the complex pricing landscape of LLM APIs becomes crucial for making cost-effective decisions and optimizing technology investments.

The rapid adoption of LLM APIs mirrors the broader trend toward cloud computing and SaaS solutions, where businesses seek scalable, on-demand access to powerful AI capabilities without the overhead of maintaining their own infrastructure. However, this convenience comes with the challenge of managing potentially unpredictable costs that can scale rapidly with usage.

This guide analyzes LLM API pricing across major providers in 2025 and offers practical strategies for cost optimization and spend management. Whether you’re a startup exploring affordable AI solutions or an enterprise looking to reduce existing AI infrastructure costs, it will help you navigate LLM API pricing.

By understanding pricing models, comparing providers, and implementing robust cost management practices, organizations can harness the power of artificial intelligence while maintaining predictable and sustainable technology budgets.

What are LLM APIs?

Large Language Model APIs are cloud-based services that provide programmatic access to advanced AI language models through standardized interfaces. These APIs allow developers and businesses to integrate sophisticated natural language processing capabilities into their applications without the need to develop, train, or host their own models.

Unlike traditional software licensing models, LLM APIs operate on usage-based pricing structures where costs are directly tied to consumption metrics such as tokens processed, requests made, or compute time utilized. This approach aligns with modern cloud cost optimization principles where organizations pay only for what they use.

How LLM APIs Differ from Traditional Software

LLM APIs represent a significant shift from conventional software deployment models. Traditional applications are installed and maintained on-premises or licensed through fixed-price SaaS subscriptions, whereas LLM APIs are consumed as services with variable pricing based on actual usage.

This consumption-based model offers several advantages including reduced upfront investment, automatic scaling, and access to cutting-edge AI models that would be prohibitively expensive to develop independently. However, it also introduces new challenges in cost prediction and budget management that require specialized SaaS spend management approaches.

Understanding LLM API Pricing Models

The pricing structure for LLM APIs varies significantly across providers, but most follow several common models that organizations need to understand for effective cost management and budgeting.

Token-Based Pricing

The most prevalent pricing model in the LLM API space is token-based billing, where costs are calculated from the number of tokens processed in both the input (prompt) and the output (completion). Tokens typically represent word fragments, and roughly 1,000 tokens correspond to about 750 English words. Providers usually price input and output tokens separately, with output tokens costing more.

This model provides granular cost control and directly correlates expenses with actual usage. However, it requires careful monitoring of token consumption patterns and optimization of prompt engineering to minimize unnecessary token usage while maintaining output quality.
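The arithmetic behind a token-based bill can be sketched in a few lines. The rates below are placeholders rather than any provider's real prices, and `estimate_tokens` simply applies the 750-words-per-1,000-tokens rule of thumb, so treat the result as a rough estimate only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Per-million-token rates in USD (placeholder values, not real quotes)."""
    input_per_million: float
    output_per_million: float


def estimate_tokens(word_count: int) -> int:
    """Rough estimate from the ~750 words per 1,000 tokens rule of thumb."""
    return round(word_count * 1000 / 750)


def request_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request; input and output tokens are billed separately."""
    return (input_tokens * price.input_per_million
            + output_tokens * price.output_per_million) / 1_000_000


example_price = TokenPrice(input_per_million=3.00, output_per_million=15.00)
prompt_tokens = estimate_tokens(1500)  # a 1,500-word prompt
reply_tokens = estimate_tokens(300)    # a 300-word reply
print(f"{prompt_tokens} in, {reply_tokens} out: "
      f"${request_cost(example_price, prompt_tokens, reply_tokens):.4f}")
```

With these placeholder rates, a 1,500-word prompt and a 300-word reply come to about $0.012 per request. For exact counts, use the tokenizer of the model you are actually calling.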

Request-Based Pricing

Some providers offer request-based pricing, where costs are determined by the number of API calls made, regardless of the length of the input or output text. This model can be more predictable for applications with consistent request patterns, but a flat per-call fee is poor value for many short requests, and long-form content generation can become expensive when it has to be split across several calls.

Request-based pricing often includes tier-based discounts for high-volume usage, making it attractive for enterprises with predictable, large-scale AI integration needs. Organizations should evaluate their expected usage patterns when choosing between token-based and request-based models.
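One way to evaluate the two models is to price a typical request under each. The sketch below assumes a hypothetical flat fee and hypothetical per-million token rates; none of these numbers come from a real price list.

```python
def token_cost(input_tokens: int, output_tokens: int,
               input_per_million: float, output_per_million: float) -> float:
    """Token-billed cost of a single request in USD."""
    return (input_tokens * input_per_million
            + output_tokens * output_per_million) / 1_000_000


def cheaper_plan(per_request_fee: float, input_tokens: int, output_tokens: int,
                 input_per_million: float, output_per_million: float) -> str:
    """Return which billing model costs less for one typical request."""
    per_token = token_cost(input_tokens, output_tokens,
                           input_per_million, output_per_million)
    if per_token < per_request_fee:
        return "token-based"
    if per_token > per_request_fee:
        return "request-based"
    return "equal"


# Hypothetical rates: $0.01 flat per call vs. $1 / $4 per million tokens.
FEE, IN_RATE, OUT_RATE = 0.01, 1.00, 4.00
print(cheaper_plan(FEE, 500, 200, IN_RATE, OUT_RATE))    # short chat turn
print(cheaper_plan(FEE, 8000, 3000, IN_RATE, OUT_RATE))  # large single request
```

The answer flips with the size of a typical request, which is why measuring your own token distribution matters before committing to either model.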

Subscription Tiers

Many LLM API providers offer subscription-based pricing tiers that combine base fees with usage allowances or discounted rates. These tiers often provide cost predictability similar to traditional SaaS models while maintaining the flexibility of usage-based billing for overages.

Enterprise subscription tiers frequently include additional benefits such as priority support, enhanced security features, custom model fine-tuning capabilities, and guaranteed availability SLAs that justify higher base costs for mission-critical applications.
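A tier that combines a base fee with an included allowance and usage-based overage can be modeled as follows. The tier parameters are invented for illustration.

```python
def monthly_bill(base_fee: float, included_tokens: int,
                 overage_per_million: float, tokens_used: int) -> float:
    """Base fee plus usage-based overage beyond the included allowance."""
    overage_tokens = max(0, tokens_used - included_tokens)
    return base_fee + overage_tokens * overage_per_million / 1_000_000


# Hypothetical tier: $500/month, 100M tokens included, $4 per extra million.
for used in (80_000_000, 100_000_000, 150_000_000):
    bill = monthly_bill(500.0, 100_000_000, 4.0, used)
    print(f"{used:>11,} tokens -> ${bill:,.2f}")
```

Usage below the allowance costs the flat base fee, so the effective per-token price rises as utilization falls. That makes the tier worthwhile only when the allowance is mostly consumed.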

Compute-Time Billing

For specialized use cases such as custom model training, fine-tuning, or dedicated inference configurations, some providers offer compute-time billing, where costs are based on the processing time an operation actually consumes rather than on tokens or requests.

Major LLM API Providers Pricing Comparison

The LLM API market in 2025 features several major providers, each with distinct pricing structures and value propositions that organizations must carefully evaluate based on their specific requirements and usage patterns.

OpenAI API Pricing

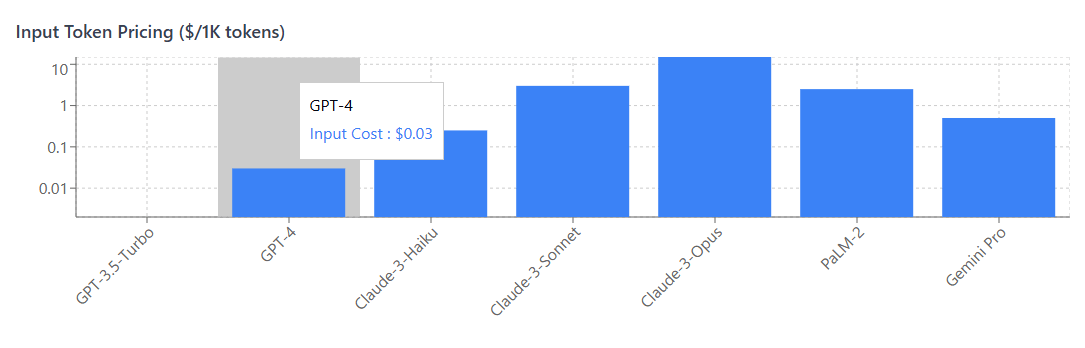

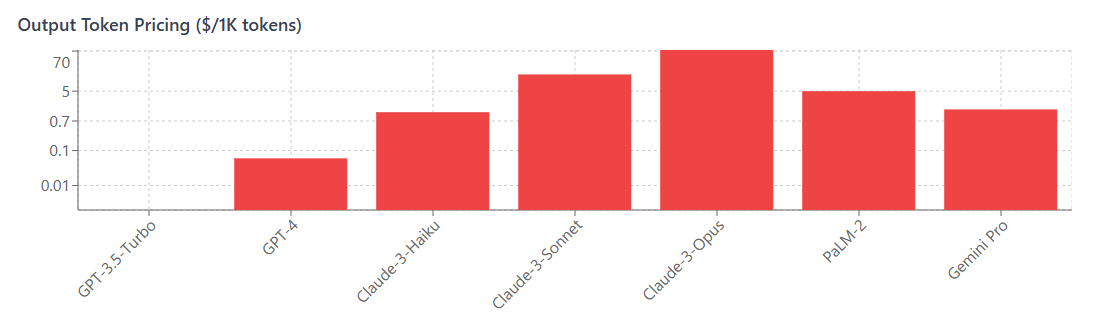

OpenAI remains a leading provider with its GPT family of models, offering tiered pricing based on model capabilities and token consumption. GPT-4 models typically cost significantly more than GPT-3.5 variants, with pricing ranging from $0.002 per 1K tokens ($2 per million) for GPT-3.5-turbo to $0.03 per 1K input tokens ($30 per million) for GPT-4.

The company’s pricing strategy emphasizes pay-per-use flexibility while offering volume discounts for enterprise customers. OpenAI also provides specialized pricing for fine-tuning services and custom model deployments, which can represent substantial cost savings for organizations with specific use case requirements.

Anthropic Claude API Pricing

Anthropic’s Claude API uses token-based pricing and emphasizes safety and reliability. Claude-3 models are priced in line with OpenAI’s offerings, ranging from $0.25 per million input tokens for Claude-3-Haiku to $15 per million input tokens for Claude-3-Opus.

Anthropic’s pricing model includes generous context windows at standard rates, making it cost-effective for applications requiring extensive context understanding. The company also offers enterprise agreements with custom pricing for high-volume usage and specialized deployment requirements.

Google Cloud AI APIs

Google’s Vertex AI platform provides access to PaLM and Gemini models with integration into the broader Google Cloud ecosystem. Pricing varies by model complexity and deployment region, with PaLM-2 typically priced at $0.0025 per 1K characters for input and $0.005 per 1K characters for output. These rates are per character rather than per token, so they are not directly comparable with the per-token prices quoted for other providers.

The platform’s integration with existing Google Cloud services can provide cost efficiencies for organizations already using Google’s cloud infrastructure, as well as opportunities for affordable cloud solutions through combined service discounts.

Microsoft Azure OpenAI Service

Microsoft’s Azure OpenAI Service provides enterprise-grade access to OpenAI models with enhanced security and compliance features. Pricing follows OpenAI’s token-based structure with additional Azure-specific tiers and enterprise discount programs.

The service integrates seamlessly with Microsoft’s broader productivity and cloud ecosystem, offering potential cost synergies for organizations heavily invested in Microsoft technologies. Enterprise agreements can provide significant discounts for committed usage volumes.

Amazon Bedrock

Amazon’s Bedrock platform offers access to multiple LLM providers through a unified interface, with pricing varying by the underlying model provider. This approach allows organizations to optimize costs by selecting the most appropriate model for specific use cases while maintaining consistent integration patterns.

Bedrock’s pricing includes Amazon’s traditional commitment-based discounts and integration with AWS cost management tools, making it attractive for organizations seeking scalable cloud computing solutions with predictable pricing.
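The prices quoted in this section use three different units: per 1K tokens, per million tokens, and per 1K characters. A small normalization step makes them comparable. The sketch below converts the figures quoted above to USD per million input tokens. The four-characters-per-token ratio is an assumption (a common rough average for English that varies by tokenizer), so the character-based result is only approximate.

```python
CHARS_PER_TOKEN = 4.0  # assumption: rough English average, varies by tokenizer


def per_1k_tokens_to_per_million(price_per_1k: float) -> float:
    """Convert a USD-per-1K-tokens price to USD per million tokens."""
    return price_per_1k * 1000


def per_1k_chars_to_per_million_tokens(price_per_1k_chars: float,
                                       chars_per_token: float = CHARS_PER_TOKEN) -> float:
    """Convert a USD-per-1K-characters price to USD per million tokens."""
    return price_per_1k_chars * 1000 * chars_per_token


# Input prices as quoted in the text above, normalized to one unit.
quoted = {
    "GPT-3.5-turbo": per_1k_tokens_to_per_million(0.002),
    "GPT-4 (input)": per_1k_tokens_to_per_million(0.03),
    "Claude-3-Haiku (input)": 0.25,
    "Claude-3-Opus (input)": 15.0,
    "PaLM-2 (input, approx.)": per_1k_chars_to_per_million_tokens(0.0025),
}

for name, usd in sorted(quoted.items(), key=lambda item: item[1]):
    print(f"{name:<26} ${usd:>6.2f} per 1M tokens")
```

Normalizing before comparing avoids the common mistake of reading a per-1K price against a per-million one.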

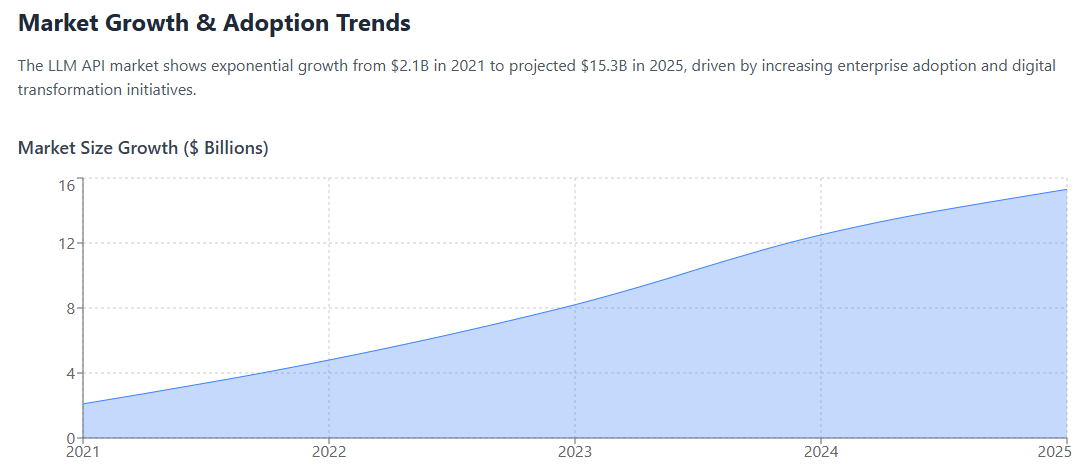

Current State of the LLM API Market

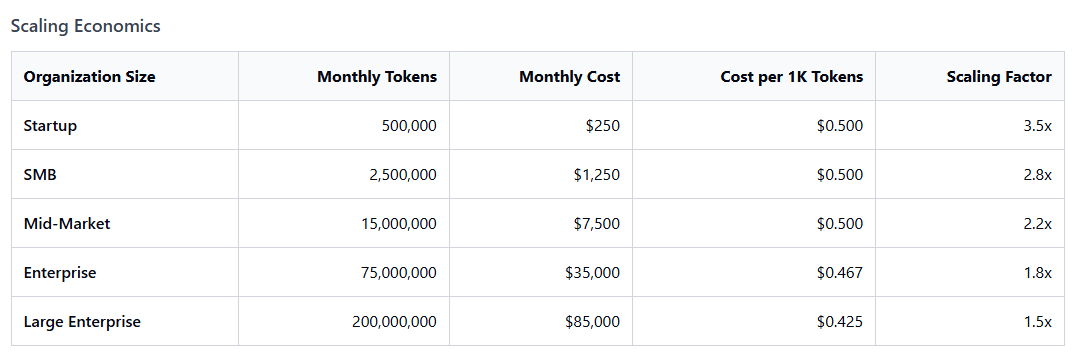

The LLM API market in 2025 demonstrates remarkable growth and increasing competitive pressure, driving innovation in both capability and pricing structures. Industry analysis suggests the market has experienced over 150% year-over-year growth, with total market size approaching $15 billion globally.

This explosive growth mirrors broader trends in cloud computing adoption and digital transformation initiatives, where organizations increasingly view AI capabilities as essential rather than optional. The democratization of access to powerful language models through APIs has enabled businesses of all sizes to integrate sophisticated AI features into their products and operations.

Market Dynamics and Competition

Intense competition among providers has led to aggressive pricing strategies and continuous improvement in model capabilities. This competitive environment benefits customers through lower prices, improved performance, and enhanced features, though it also creates challenges in vendor selection and cost management.

The market exhibits characteristics similar to more established cloud services, with price wars driving down the cost of basic functionality while premium features and enterprise services command higher margins. Organizations benefit from this competition through improved value propositions and more flexible pricing options.

Enterprise Adoption Patterns

Enterprise adoption of LLM APIs has accelerated significantly, with surveys indicating that over 70% of large organizations are either actively using or piloting LLM integrations. This widespread adoption creates economies of scale that further drive down costs while increasing demand for enterprise-grade features and support.

The shift toward enterprise adoption has also influenced pricing models, with providers increasingly offering commitment-based discounts, custom SLAs, and specialized security features that justify premium pricing tiers for mission-critical applications.

Hidden Costs in LLM API Usage

While LLM API pricing appears straightforward on the surface, organizations often encounter unexpected costs that can significantly impact budgets if not properly anticipated and managed through effective cloud cost optimization strategies.

Data Transfer and Storage Costs

Many organizations overlook data transfer costs associated with LLM API usage, particularly for applications processing large volumes of text or requiring frequent model interactions. These costs can accumulate rapidly for high-throughput applications or those requiring cross-region API access.

Additionally, organizations often need to store conversation histories, training data, or model outputs, creating ongoing storage costs that compound over time. Implementing effective data lifecycle management and automated cost tracking can help control these expenses.

Fine-Tuning and Custom Model Costs

Custom model fine-tuning represents a significant hidden cost category that can dramatically exceed standard API usage fees. Fine-tuning typically requires substantial compute resources and data processing, with costs often ranging from hundreds to thousands of dollars per training session.

Organizations pursuing custom models must also consider ongoing maintenance, retraining, and version management costs that extend far beyond initial development expenses. These considerations require careful ROI analysis and long-term budget planning.

Integration and Development Overhead

The technical integration of LLM APIs into existing systems often requires significant development resources and ongoing maintenance that should be factored into total cost of ownership calculations. These soft costs can represent 2-3x the direct API usage fees for complex implementations.

Additionally, organizations may need to invest in specialized monitoring, security, and compliance tools to properly manage LLM integrations, particularly in regulated industries or enterprise environments with strict data governance requirements.
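To make the total-cost-of-ownership point concrete, the sketch below adds soft costs, expressed as a multiple of direct API fees, to the fees themselves. The monthly fee is a hypothetical figure. The 2x and 3x multiples are the range cited above for complex implementations.

```python
def total_cost_of_ownership(direct_api_fees: float,
                            soft_cost_multiple: float) -> float:
    """Direct fees plus soft costs expressed as a multiple of those fees."""
    return direct_api_fees * (1 + soft_cost_multiple)


monthly_api_fees = 10_000.0  # hypothetical direct API spend
low = total_cost_of_ownership(monthly_api_fees, 2.0)
high = total_cost_of_ownership(monthly_api_fees, 3.0)
print(f"TCO range: ${low:,.0f} to ${high:,.0f} per month")
```

Under these assumptions, a $10,000 API bill implies a total monthly cost of $30,000 to $40,000, so budgeting from the API invoice alone understates spend by a wide margin.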

Cost Optimization Strategies

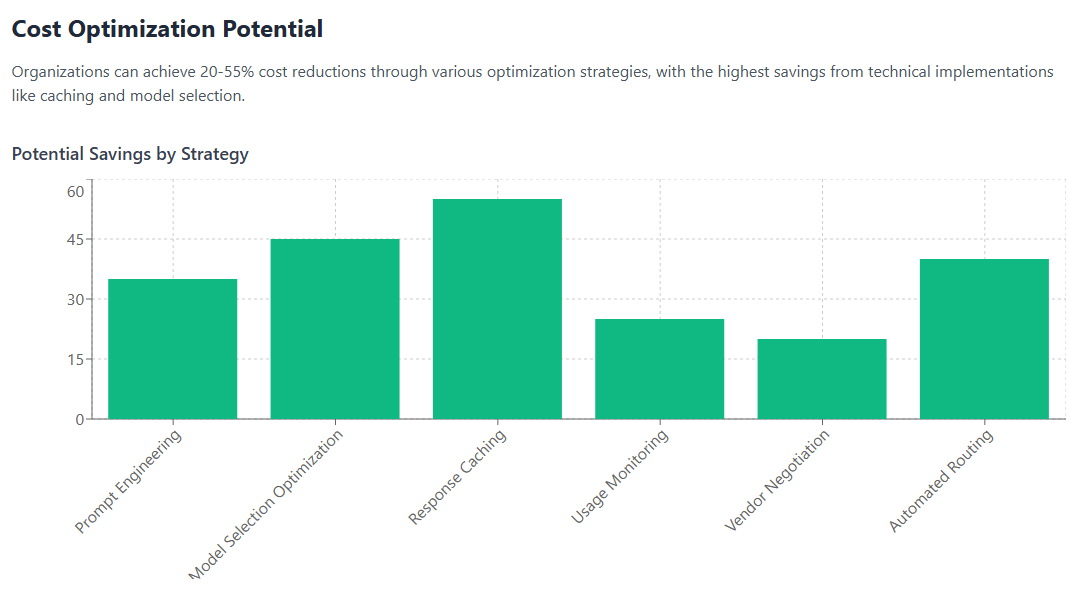

Effective LLM API cost optimization requires a multi-faceted approach that combines technical optimization, usage monitoring, and strategic vendor management. Organizations implementing comprehensive cost optimization strategies typically achieve 30-50% reductions in API-related expenses.

Prompt Engineering Optimization

Optimizing prompt design represents one of the most effective approaches to reducing LLM API costs while maintaining or improving output quality. Well-crafted prompts can significantly reduce token consumption by providing clearer instructions and minimizing the need for multiple iterations.

Advanced prompt engineering techniques include few-shot learning optimization, context compression, and output format specification that can reduce total token usage by 20-40%. Organizations should invest in prompt engineering best practices and automated optimization tools to maximize cost efficiency.
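Context compression can take many forms. The simplest is truncation: keep only the most recent conversation turns that fit within a token budget. The sketch below illustrates that variant only, with a word-based token estimate standing in for a real tokenizer. Summarization-based compression is not shown.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate from the ~750 words per 1,000 tokens rule."""
    return max(1, round(len(text.split()) * 1000 / 750))


def trim_history(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose estimated tokens fit the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "You are a helpful assistant for billing questions.",
    "How are tokens counted?",
    "Tokens are word fragments.",
    "And how is output billed?",
]
print(trim_history(history, max_tokens=12))
```

Note that plain truncation also drops the oldest message, which here is the instruction line. A production version would typically pin such instructions and trim only the turns that follow.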

Model Selection and Routing

Implementing intelligent model selection based on task complexity can dramatically reduce costs by using less expensive models for simpler tasks while reserving premium models for complex requirements. This approach requires careful analysis of use case requirements and automated routing logic.

Many organizations implement model cascading strategies where simple requests are handled by cost-effective models, with complex or failed requests escalated to more powerful (and expensive) alternatives. This approach can reduce average costs by 25-50% while maintaining output quality.
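A cascade of this kind might look like the following sketch. Both models are stand-in stubs, the per-call costs are hypothetical, and "failure" is reduced to the cheap model returning `None`. A real system would need its own quality check to decide when to escalate.

```python
from typing import Callable, Optional

# A model is any callable returning an answer, or None when it cannot
# produce an acceptable one. Both models below are illustrative stubs.
ModelFn = Callable[[str], Optional[str]]


def cheap_model(prompt: str) -> Optional[str]:
    # Stub: pretend the small model only handles short prompts.
    return f"cheap answer to: {prompt}" if len(prompt.split()) <= 20 else None


def premium_model(prompt: str) -> Optional[str]:
    return f"premium answer to: {prompt}"


def cascade(prompt: str,
            tiers: list[tuple[str, float, ModelFn]]) -> tuple[str, str, float]:
    """Try tiers cheapest first; return (tier name, answer, total cost)."""
    spent = 0.0
    for name, cost_per_call, call in tiers:
        spent += cost_per_call  # a failed attempt is still billed
        answer = call(prompt)
        if answer is not None:
            return name, answer, spent
    raise RuntimeError("no tier produced an acceptable answer")


TIERS = [("cheap", 0.001, cheap_model), ("premium", 0.03, premium_model)]
print(cascade("Summarize this sentence.", TIERS))
print(cascade("word " * 50, TIERS)[0])
```

Escalated requests pay for both attempts, so a cascade only saves money when most traffic is resolved at the cheaper tier.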

Caching and Response Optimization

Implementing intelligent caching strategies can significantly reduce API costs by storing and reusing responses for similar queries. Advanced caching systems can achieve 30-60% cost reductions for applications with repeated or similar query patterns.

Response optimization techniques include output length limiting, format standardization, and result post-processing that can reduce token consumption while improving application performance. These strategies require careful balance between cost reduction and functionality preservation.
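The simplest caching layer is an exact-match cache keyed on a normalized prompt, as sketched below. `call_model` is a hypothetical stand-in for whatever client function sends the request. Matching prompts that are merely similar rather than identical would need something like embedding-based lookup, which is not shown here.

```python
import hashlib
from typing import Callable


class ResponseCache:
    """Exact-match cache keyed on a whitespace- and case-normalized prompt."""

    def __init__(self, call_model: Callable[[str], str]) -> None:
        self._call_model = call_model
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1  # only misses reach the paid API
        response = self._call_model(prompt)
        self._store[key] = response
        return response


calls: list[str] = []


def fake_model(prompt: str) -> str:
    """Stub standing in for a billed API call."""
    calls.append(prompt)
    return prompt.upper()


cache = ResponseCache(fake_model)
cache.get("What is a token?")
cache.get("  what is a TOKEN? ")  # same prompt after normalization
print(cache.hits, cache.misses, len(calls))
```

Lowercasing the key assumes case never changes the intended answer. Drop that step for case-sensitive workloads such as code generation.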

Examples of Good and Poor LLM API Spending Practices

Understanding real-world examples of effective and ineffective LLM API cost management helps organizations avoid common pitfalls while implementing proven optimization strategies.

Good LLM API Spending Practices

Comprehensive Usage Monitoring: Leading organizations implement detailed usage tracking and analytics that provide visibility into cost drivers, usage patterns, and optimization opportunities. This includes real-time monitoring dashboards and automated alerting for unusual spending patterns.

Strategic Vendor Management: Successful cost management includes regular vendor performance reviews, competitive pricing analysis, and strategic negotiations for enterprise agreements or volume discounts. Organizations with effective SaaS spend management practices typically achieve 15-25% better pricing through strategic vendor relationships.

Automated Cost Controls: Implementing automated spending limits, usage quotas, and approval workflows prevents runaway costs while maintaining operational flexibility. These controls should be coupled with intelligent alerting and escalation procedures.

Cross-Team Collaboration: Organizations achieve better cost outcomes through collaboration between development, operations, and finance teams, ensuring that technical optimization decisions align with business objectives and budget constraints.
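The automated cost controls described above can be as simple as a guard that tracks spend against a limit, signals when an alert threshold is crossed, and refuses charges that would exceed the budget. The class name, the 80% alert threshold, and the limit below are illustrative choices, not a standard.

```python
class BudgetExceeded(Exception):
    """Raised when a charge would push spend past the monthly limit."""


class BudgetGuard:
    def __init__(self, monthly_limit_usd: float, alert_fraction: float = 0.8) -> None:
        self.limit = monthly_limit_usd
        self.alert_at = monthly_limit_usd * alert_fraction
        self.spent = 0.0

    def charge(self, cost_usd: float) -> str:
        """Record a charge; return "ok" or "alert", or raise if over limit."""
        if self.spent + cost_usd > self.limit:
            raise BudgetExceeded(
                f"charge of ${cost_usd:.2f} would exceed ${self.limit:.2f} limit")
        self.spent += cost_usd
        return "alert" if self.spent >= self.alert_at else "ok"


guard = BudgetGuard(monthly_limit_usd=100.0)
print(guard.charge(50.0))  # well under the threshold
print(guard.charge(35.0))  # crosses the 80% alert threshold
try:
    guard.charge(25.0)     # would exceed the limit, so it is refused
except BudgetExceeded as error:
    print(f"blocked: {error}")
```

In practice the "alert" result would feed a notification or approval workflow, and the hard stop would usually apply per environment so that development and testing cannot consume the production budget.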

Poor LLM API Spending Practices

Uncontrolled API Access: Allowing unrestricted API access without usage monitoring or approval workflows frequently results in unexpected cost overruns and budget surprises. This practice is particularly problematic in development and testing environments.

Ignoring Model Efficiency: Using premium models for all use cases without considering task-appropriate alternatives wastes resources and inflates costs unnecessarily. Many organizations achieve significant savings by implementing model selection optimization.

Lack of Usage Analytics: Operating without comprehensive usage analytics prevents organizations from identifying cost optimization opportunities and understanding spending patterns that drive budget planning decisions.

Poor Prompt Engineering: Inefficient prompts that require multiple iterations or generate excessive tokens can dramatically inflate costs while degrading user experience. Organizations should invest in prompt optimization and engineering best practices.

How to Monitor and Manage LLM API Costs

Effective LLM API cost management requires robust monitoring, analytics, and optimization tools that provide visibility into usage patterns and cost drivers while enabling proactive management of technology investments.

Centralized Cost Management Platforms

Organizations benefit significantly from centralized platforms that aggregate LLM API usage and costs across multiple providers and applications. These platforms should provide unified dashboards, automated reporting, and integration with existing financial management systems.

Modern cloud management tools for AI services include features specifically designed for LLM cost tracking, including token usage analytics, model performance comparisons, and automated optimization recommendations that help organizations achieve better cost outcomes.

Real-Time Usage Monitoring

Implementing real-time monitoring systems enables organizations to detect cost anomalies quickly and respond proactively to prevent budget overruns. These systems should include configurable alerting, automated reporting, and integration with incident management workflows.

Advanced monitoring platforms provide predictive analytics that forecast future costs based on current usage trends, enabling better budget planning and resource allocation decisions. This capability is particularly valuable for organizations with rapidly scaling AI implementations.
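As a rough illustration of both ideas, the sketch below projects month-end spend from the average daily run rate and flags a day as anomalous when it sits far above recent history. The z-score threshold of 3 and the sample figures are assumptions. Production systems typically use more robust methods that account for trend and seasonality.

```python
from statistics import mean, pstdev


def forecast_month_end(daily_spend: list[float], days_in_month: int) -> float:
    """Project monthly spend by extending the average daily run rate."""
    return mean(daily_spend) * days_in_month


def is_anomaly(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's spend if it is far above the recent daily average."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return today > mu  # flat history: any increase is unusual
    return (today - mu) / sigma > z_threshold


recent_days = [100.0, 110.0, 90.0, 105.0, 95.0]  # hypothetical daily USD spend
print(f"projected month: ${forecast_month_end(recent_days, 30):,.2f}")
print("150 anomalous?", is_anomaly(recent_days, 150.0))
print("110 anomalous?", is_anomaly(recent_days, 110.0))
```

Only upward deviations are flagged here, since a sudden drop in spend is rarely a budget problem, though it may indicate an outage worth alerting on separately.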

Automated Optimization Tools

Leading organizations implement automated tools that optimize LLM API usage in real-time, including intelligent model routing, prompt optimization, and response caching. These tools can achieve significant cost reductions while maintaining or improving application performance.

Automation tools should include machine learning capabilities that continuously improve optimization decisions based on historical performance data and changing usage patterns. This approach ensures that cost optimization strategies remain effective as applications evolve and scale.

Integration with Financial Systems

Effective cost management requires integration between LLM API monitoring tools and existing financial management systems, enabling accurate cost allocation, budgeting, and reporting that aligns with organizational financial processes.

Organizations using comprehensive SaaS management platforms achieve better cost control through unified visibility across all technology investments, enabling better decision-making and resource optimization across their entire technology portfolio.
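Cost allocation ultimately comes down to tagging each usage record with an owner and aggregating. The sketch below assumes a hypothetical record shape (`team`, `provider`, `cost_usd`). Real exports from providers or billing tools will have their own field names.

```python
from collections import defaultdict

# Hypothetical usage records, e.g. merged from several providers' exports.
usage_records = [
    {"team": "search", "provider": "provider-a", "cost_usd": 120.0},
    {"team": "search", "provider": "provider-b", "cost_usd": 30.0},
    {"team": "support", "provider": "provider-a", "cost_usd": 75.5},
]


def allocate(records: list[dict], key: str) -> dict[str, float]:
    """Sum cost_usd grouped by the given field (e.g. "team" or "provider")."""
    totals: defaultdict[str, float] = defaultdict(float)
    for record in records:
        totals[record[key]] += record["cost_usd"]
    return dict(totals)


print(allocate(usage_records, "team"))
print(allocate(usage_records, "provider"))
```

The same grouped totals can then be exported to a finance system for chargeback or budgeting. The key requirement is that every API call carries the tag at request time.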

Emerging Trends in LLM Pricing

The LLM API pricing landscape continues to evolve rapidly, with several emerging trends that will significantly affect cost structures and optimization strategies in the coming years.

Usage-Based Pricing Evolution

Providers are developing more sophisticated usage-based pricing models that account for factors beyond simple token counts, including output quality, response time requirements, and specific model capabilities. These nuanced pricing structures offer better cost alignment with actual value delivered.

Advanced pricing models may include performance-based components where costs vary based on measurable output quality metrics, enabling organizations to optimize costs while maintaining service quality standards.

Subscription and Commitment Models

The market is seeing increased adoption of hybrid pricing models that combine subscription-based predictability with usage-based flexibility. These models help organizations balance cost predictability with operational flexibility while achieving volume-based discounts.

Enterprise commitment models are becoming more sophisticated, offering significant discounts for committed usage volumes while providing flexibility for seasonal or project-based demand variations.
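Whether a commitment pays off depends on how actual usage compares with the committed volume. The sketch below assumes a simple contract shape: the committed volume is billed whether or not it is used, and all usage, including overage, gets the discounted rate. Real contracts vary, and every number here is hypothetical.

```python
def pay_as_you_go(tokens_millions: float, rate_per_million: float) -> float:
    """On-demand cost with no commitment."""
    return tokens_millions * rate_per_million


def committed_cost(tokens_millions: float, commit_millions: float,
                   rate_per_million: float, discount: float) -> float:
    """Cost under a commitment: the committed volume is paid even if unused."""
    discounted_rate = rate_per_million * (1 - discount)
    billable = max(tokens_millions, commit_millions)
    return billable * discounted_rate


RATE, COMMIT, DISCOUNT = 10.0, 100.0, 0.20  # hypothetical contract terms
for used in (60.0, 80.0, 120.0):
    on_demand = pay_as_you_go(used, RATE)
    commit = committed_cost(used, COMMIT, RATE, DISCOUNT)
    print(f"{used:>5.0f}M tokens: on-demand ${on_demand:,.0f}, committed ${commit:,.0f}")
```

Under these terms the break-even point is usage equal to the commitment times one minus the discount (80M tokens here). Below that, the unused commitment outweighs the discount.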

Specialized Pricing for Vertical Solutions

Providers are introducing vertical-specific pricing models optimized for particular industries or use cases, similar to trends observed in broader cloud computing services. These specialized models can provide better cost efficiency for organizations with specific regulatory or operational requirements.

Industry-specific pricing often includes bundled services such as compliance tools, specialized security features, and domain-specific model optimizations that justify premium pricing while delivering enhanced value.

Conclusion

The LLM API pricing landscape in 2025 presents both tremendous opportunities and complex challenges for organizations seeking to leverage artificial intelligence capabilities cost-effectively. Understanding the nuanced pricing models, hidden costs, and optimization strategies outlined in this guide is essential for achieving successful AI implementations that deliver value while maintaining budget discipline.

Organizations that approach LLM API cost management strategically, implementing comprehensive monitoring, optimization tools, and vendor management practices, consistently achieve better outcomes than those that treat AI services as simple utility purchases. The investment in proper cost management infrastructure pays dividends through reduced expenses, improved predictability, and better alignment between AI investments and business objectives.

As the market continues to mature, we can expect further evolution in pricing models, optimization tools, and vendor offerings that will create new opportunities for cost-effective AI integration. Organizations that stay informed about these developments while maintaining robust cost management practices will be well-positioned to capitalize on the potential of large language models.

The key to success lies in treating LLM API cost management as an ongoing strategic capability rather than a one-time optimization project. Through careful planning, continuous optimization, and strategic vendor management, businesses of all sizes can access cutting-edge AI capabilities while keeping their technology budgets predictable and sustainable.