Large Language Models (LLMs) have emerged as transformative tools in the data analysis landscape, changing how organizations process, interpret, and derive insights from their data. Applying LLMs to data analysis represents a shift from traditional statistical approaches to AI-powered analytics that can understand context, generate human-readable insights, and automate complex analytical workflows.

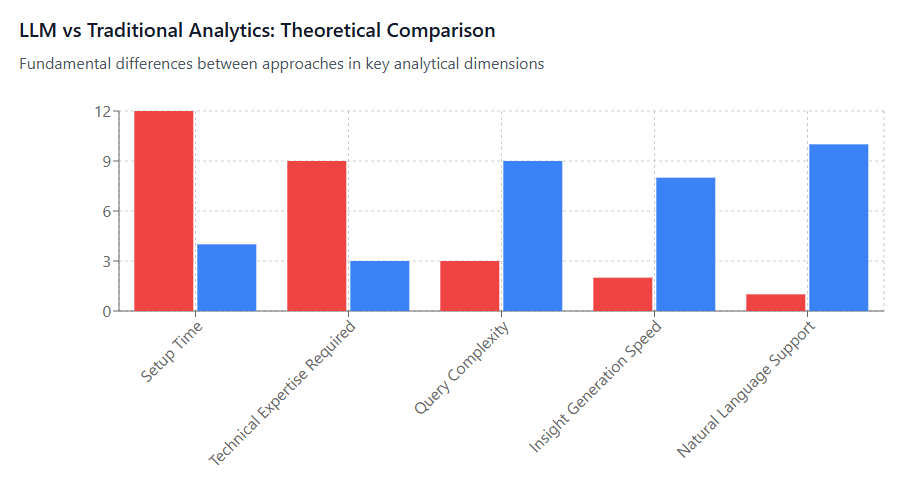

As businesses increasingly rely on data-driven decision-making, demand for sophisticated analytics tools has grown rapidly. Traditional data analysis methods often require extensive technical expertise and time-consuming manual processes. LLMs address these challenges with natural language interfaces, automated pattern recognition, and intelligent summarization that make advanced analytics accessible to a broader range of users.

The value of LLM-based data science solutions extends beyond efficiency gains. Organizations that successfully adopt these technologies report faster decision-making, more accurate predictive modeling, and substantial cost savings through automated processes. This guide covers how to select, implement, and optimize LLM solutions for data analysis workflows.

Understanding the current landscape of LLM analytics solutions is crucial for organizations seeking to maintain competitive advantages. From cost-effective cloud-based platforms to enterprise-grade on-premises deployments, the range of available options requires careful evaluation to ensure alignment with specific business requirements and budget constraints. Organizations should also consider implementing comprehensive cloud management strategies to maximize the effectiveness of their LLM deployments.

What are LLMs in Data Analysis?

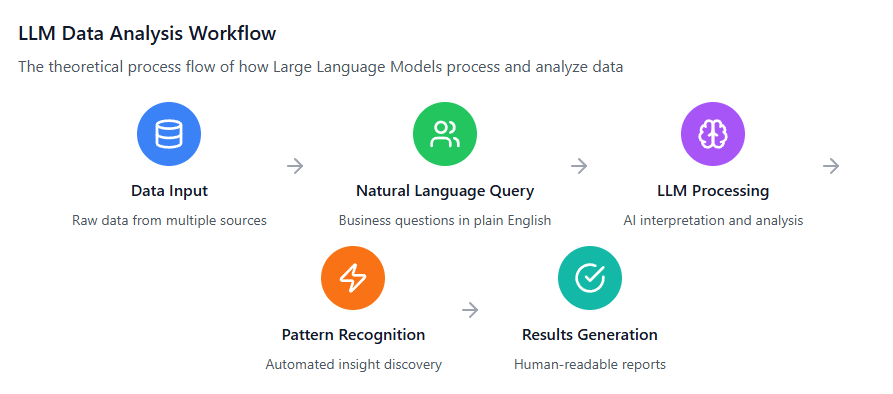

In data analysis, Large Language Models are advanced AI systems applied to processing, interpreting, and generating insights from structured and unstructured data through natural language understanding. Unlike traditional analytics tools that require predefined queries and technical expertise, LLM-based analyst systems can interpret complex business questions posed in natural language and return detailed analytical responses.

The core functions of LLM-based data science tools span several areas: automated data exploration, pattern recognition, statistical analysis, predictive modeling, and insight generation. These systems build on transformer models trained on very large text corpora; for numerical work they typically generate and execute code or queries against the data, then interpret the results in terms that would otherwise require human expertise.

Modern LLM implementations for data analysis can connect to existing business intelligence infrastructure and work with a range of data sources, including CSV files, databases, APIs, and real-time streaming data. This lets organizations democratize data analysis by allowing non-technical stakeholders to explore complex datasets through conversational interfaces.

Key Characteristics of LLM Data Analysis

Natural Language Processing: LLMs excel at understanding context within data queries, enabling users to ask complex questions without mastering SQL or programming languages. This capability significantly reduces the barrier to entry for data analysis across organizations.

Automated Pattern Recognition: LLM-driven tools can surface trends, anomalies, and correlations within datasets that traditional analysis methods might overlook. This automated discovery accelerates insight generation, although surfaced patterns still benefit from statistical validation before they inform decisions.

Multi-modal Analysis: Modern LLM solutions support analysis across different data types, including text, numerical data, images, and time-series information, providing comprehensive analytical capabilities within a single platform.

Contextual Understanding: Unlike rule-based systems, LLMs understand the business context behind data requests, generating relevant insights that align with organizational objectives and industry-specific requirements.

LLM Capabilities for Data Analysis Tasks

The strongest LLM solutions for data analysis offer capabilities that span the entire analytical workflow, from data preparation to insight presentation. Understanding these capabilities is essential for organizations evaluating LLM implementations for their data science initiatives.

Data Preprocessing and Cleaning

LLM systems excel at automated data preprocessing tasks, including missing value imputation, outlier detection, and data format standardization. These capabilities significantly reduce the time data scientists spend on preparatory work, allowing focus on higher-value analytical activities. Advanced LLM solutions can identify data quality issues and suggest appropriate remediation strategies based on dataset characteristics and business context.

The automation extends to data transformation tasks, where LLMs can understand business requirements and automatically generate appropriate data pipelines. This includes feature engineering, categorical variable encoding, and temporal data alignment – tasks that traditionally required extensive manual coding and domain expertise.
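In practice, LLM assistants often carry out these steps by generating code that an analyst reviews and runs, rather than by editing the data themselves. The following minimal sketch shows the kind of code involved, using only the Python standard library: median imputation, outlier flagging with Tukey's 1.5 × IQR fences, and one-hot encoding. The function names and sample values are hypothetical.

```python
import statistics

def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def iqr_outliers(values, k=1.5):
    """Return indices of values outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]

def one_hot(labels):
    """Encode category labels as 0/1 indicator columns, one per distinct label."""
    levels = sorted(set(labels))
    return [{f"is_{level}": int(label == level) for level in levels} for label in labels]

# Illustrative data: order amounts with one missing value and one extreme value.
amounts = impute_median([120.0, None, 95.0, 110.0, 105.0, 2000.0])
print(amounts)                # the None is replaced by the median, 110.0
print(iqr_outliers(amounts))  # [5]: the 2000.0 order lies above the upper fence
print(one_hot(["north", "south", "north"]))
```

Generated code like this is easy to audit, which matters because imputation and outlier rules encode business assumptions that a reviewer should confirm.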

Exploratory Data Analysis

Modern LLM analytics platforms provide sophisticated exploratory data analysis capabilities that go beyond traditional statistical summaries. These systems can automatically generate comprehensive data profiles, identify interesting patterns, and suggest relevant visualizations based on data characteristics and analytical objectives.

The natural language interface allows analysts to explore data intuitively, asking questions like “What are the main factors driving customer churn?” or “How has revenue performance varied across different market segments?” The LLM processes these queries, performs appropriate statistical analyses, and presents findings in easily understandable formats.
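Many natural language interfaces of this kind are built on a text-to-SQL pattern: the model translates the question into a query, and the application validates the query before running it. The sketch below assumes that pattern and stubs the model with a hypothetical `generate_sql` function (a real system would call an LLM here, passing the table schema along with the question). The `sales` table and its rows are made up for illustration; SQLite comes from Python's standard library.

```python
import re
import sqlite3

def generate_sql(question):
    """Hypothetical stand-in for an LLM call that translates a question into SQL."""
    return ("SELECT segment, SUM(revenue) AS total FROM sales "
            "GROUP BY segment ORDER BY total DESC")

FORBIDDEN = re.compile(r"\b(INSERT|UPDATE|DELETE|DROP|ALTER|CREATE|ATTACH|PRAGMA)\b",
                       re.IGNORECASE)

def is_read_only(sql):
    """Accept a single SELECT statement; reject writes and stacked statements."""
    stripped = sql.strip().rstrip(";")
    return (stripped.upper().startswith("SELECT")
            and ";" not in stripped
            and not FORBIDDEN.search(stripped))

def answer(question, conn):
    sql = generate_sql(question)
    if not is_read_only(sql):
        raise ValueError(f"Refusing to run non-SELECT SQL: {sql!r}")
    return conn.execute(sql).fetchall()

# In-memory demo table with illustrative revenue figures.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (segment TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("enterprise", 500.0), ("smb", 200.0),
                  ("enterprise", 300.0), ("consumer", 150.0)])
rows = answer("How has revenue performance varied across market segments?", conn)
print(rows)  # [('enterprise', 800.0), ('smb', 200.0), ('consumer', 150.0)]
```

The keyword check is only a first line of defense. Opening the database read-only (for SQLite, `sqlite3.connect("file:sales.db?mode=ro", uri=True)`) or using a database role without write privileges is a sturdier guard against a model emitting destructive SQL.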

Predictive Modeling and Machine Learning

LLM-powered analytics platforms incorporate automated machine learning (AutoML) capabilities that can select appropriate algorithms, optimize hyperparameters, and validate model performance without extensive manual intervention. This democratization of machine learning enables organizations to deploy predictive models across various business functions. When implementing these solutions, organizations should evaluate native tools versus third-party alternatives to determine the optimal integration approach.
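Stripped to its core, automated model selection fits several candidate models, scores each on held-out data, and keeps the best. The deliberately tiny sketch below illustrates that loop with two hypothetical candidates, a mean baseline and a one-feature least-squares line, using only standard-library Python; real AutoML tools search much larger spaces of algorithms and hyperparameters.

```python
def fit_mean(xs, ys):
    """Baseline: always predict the training mean."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y

def fit_ols(xs, ys):
    """Ordinary least squares for y = a + b*x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    return lambda x: a + b * x

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def select_model(xs, ys, candidates, train_fraction=0.75):
    """Fit each candidate on the first part of the data; pick the lowest validation MSE."""
    cut = int(len(xs) * train_fraction)
    scores = {}
    for name, fit in candidates.items():
        model = fit(xs[:cut], ys[:cut])
        scores[name] = mse(model, xs[cut:], ys[cut:])
    best = min(scores, key=scores.get)
    return best, scores

xs = list(range(12))
ys = [2.0 * x + 1.0 + (0.5 if x % 2 else -0.5) for x in xs]  # noisy linear trend
best, scores = select_model(xs, ys, {"mean_baseline": fit_mean, "ols": fit_ols})
print(best)  # ols
```

The split keeps the last quarter of the rows for validation instead of shuffling, which is the safer default for time-ordered business data; for independent records, k-fold cross-validation gives a less noisy estimate.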

Combining natural language understanding with machine learning workflows makes models easier to interpret. LLMs can explain model predictions in business terms, highlight the most influential features, and provide actionable recommendations based on predictive insights.

Advanced Analytics and Statistical Inference

LLM data science solutions provide sophisticated statistical analysis capabilities, including hypothesis testing, regression analysis, time series forecasting, and causal inference. These tools can automatically select appropriate statistical methods based on data characteristics and research questions.
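As an example of a method such a tool might select, a permutation test checks whether a difference in means between two groups could plausibly arise by chance, without assuming normally distributed data. The sketch below is a generic standard-library implementation; the daily order counts for "control" and "treatment" stores are invented for illustration.

```python
import random

def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns (observed_difference, p_value). The p-value is the share of random
    relabelings whose absolute mean difference is at least the observed one.
    """
    rng = random.Random(seed)
    observed = sum(group_a) / len(group_a) - sum(group_b) / len(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        a, b = pooled[:n_a], pooled[n_a:]
        diff = sum(a) / len(a) - sum(b) / len(b)
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value

control = [12, 15, 11, 14, 13, 12, 16, 14]
treatment = [18, 21, 19, 22, 20, 19, 23, 21]
observed, p_value = permutation_test(control, treatment)
print(observed, p_value < 0.01)  # -7.0 True
```

An LLM layer would then translate the result into plain language, for example that treatment stores averaged seven more orders per day and that a gap this large is very unlikely under random assignment.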

The natural language explanation capabilities ensure that statistical results are presented in accessible formats, making complex analytical findings understandable to business stakeholders without statistical backgrounds. This bridges the communication gap between technical teams and business decision-makers.

Best LLM Solutions for Analytics

Selecting the best LLM for data analysis requires careful evaluation of various factors including performance capabilities, cost structure, integration options, and scalability requirements. The current market offers diverse solutions ranging from cloud-based services to on-premises deployments, each with distinct advantages and limitations.

OpenAI GPT-4 and Analytics APIs

OpenAI’s GPT-4 represents one of the most capable LLM solutions for data analysis applications, offering advanced natural language understanding and generation capabilities. The platform provides robust APIs for integrating analytical workflows with existing business systems, supporting both real-time and batch processing requirements.

The GPT-4 architecture excels at complex reasoning tasks, making it particularly suitable for advanced analytics scenarios requiring multi-step logical inference. Organizations using GPT-4 for data analysis report significant improvements in analytical accuracy and insight quality, particularly for unstructured data analysis and natural language report generation.

Key Advantages:

- Superior natural language understanding and generation

- Extensive API ecosystem for integration

- Strong performance on complex reasoning tasks

- Regular model updates and improvements

Considerations:

- Higher cost per token compared to alternatives

- API dependency requires reliable internet connectivity

- Data privacy considerations for sensitive information
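One way to address the data privacy consideration is to send the model only what it needs, for example a schema and a few redacted sample rows instead of raw records. The sketch below builds a request body in the shape used by OpenAI's Chat Completions API (`model`, `temperature`, and `messages` entries with `role` and `content`) without sending it. The model name, the `SENSITIVE_COLUMNS` set, and the prompt wording are placeholder assumptions, and the current API reference should be checked before use.

```python
import json

# Assumption: the sensitive columns come from your own data governance policy.
SENSITIVE_COLUMNS = {"email", "customer_name"}

def redact(row):
    """Mask values in columns flagged as sensitive."""
    return {k: ("[REDACTED]" if k in SENSITIVE_COLUMNS else v) for k, v in row.items()}

def build_request(question, schema, sample_rows, model="gpt-4"):
    """Assemble a Chat Completions-style request body; nothing is sent over the network."""
    context = {
        "schema": schema,
        "sample_rows": [redact(r) for r in sample_rows[:5]],  # cap the sample to limit tokens
    }
    return {
        "model": model,
        "temperature": 0,  # favor repeatable answers for analytical tasks
        "messages": [
            {"role": "system",
             "content": "You are a data analyst. Answer using only the provided data context."},
            {"role": "user",
             "content": f"Data context:\n{json.dumps(context)}\n\nQuestion: {question}"},
        ],
    }

body = build_request(
    "Which region has the highest churn?",
    {"region": "TEXT", "churned": "BOOLEAN", "email": "TEXT"},
    [{"region": "EMEA", "churned": True, "email": "a@example.com"}],
)
print(json.dumps(body, indent=2))
```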

Google Cloud Vertex AI and BigQuery ML

Google’s Vertex AI platform combined with BigQuery ML provides comprehensive LLM analytics capabilities optimized for large-scale data processing. The platform offers seamless integration with Google’s cloud infrastructure, enabling efficient processing of massive datasets while maintaining cost-effectiveness.

The BigQuery ML integration allows organizations to perform machine learning directly within their data warehouse, reducing data movement costs and improving processing efficiency. The platform’s auto-scaling capabilities ensure optimal resource utilization across varying workload demands.

Key Advantages:

- Integrated cloud analytics ecosystem

- Optimized for large-scale data processing

- Cost-effective pricing for high-volume workloads

- Strong integration with Google Workspace tools

Microsoft Azure OpenAI and Cognitive Services

Microsoft’s Azure OpenAI service provides enterprise-grade access to OpenAI models for data science workloads, with enhanced security and compliance features. The platform offers hybrid deployment options, allowing organizations to balance performance, cost, and data governance requirements.

The integration with Microsoft’s broader ecosystem, including Power BI and Office 365, creates seamless analytical workflows that extend from data processing to business presentation. This comprehensive integration reduces implementation complexity and improves user adoption rates. Organizations can further optimize their Microsoft ecosystem deployments by following best practices for Office 365 cost optimization.

Key Advantages:

- Enterprise security and compliance features

- Hybrid deployment options

- Strong integration with Microsoft ecosystem

- Comprehensive support and documentation

Open-Source Alternatives

Several open-source options provide cost-effective alternatives for organizations with specific technical requirements or budget constraints. Libraries such as Hugging Face Transformers, orchestration frameworks such as LangChain, and locally hosted open-weight models offer extensive customization while keeping costs under control.

Open-source solutions are particularly attractive for organizations with strong technical capabilities and specific customization requirements. These platforms enable fine-tuning for domain-specific applications and provide complete control over data processing and model deployment.

Cost Comparison of LLM Analytics Solutions

Understanding the cost implications of different LLM for data analysis solutions is crucial for making informed implementation decisions. The total cost of ownership includes not only direct usage fees but also infrastructure costs, integration expenses, and ongoing maintenance requirements.

Cloud-Based Pricing Models

Usage-Based Pricing: Most cloud LLM services use token-based pricing, so costs scale directly with usage volume. OpenAI’s GPT-4 pricing has ranged from $0.01 to $0.12 per 1K tokens depending on the model variant, with input and output tokens billed at different rates. For typical data analysis workloads processing 100,000 queries monthly, costs can range from $500 to $3,000 per month.
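Because input and output tokens are billed separately, a per-query estimate multiplies each token count by its own rate. The helper below is a minimal sketch of that arithmetic; the token counts and the rates of $0.01 (input) and $0.03 (output) per 1K tokens are placeholder assumptions, and a provider's current price list should be used for real estimates.

```python
def monthly_cost(queries, input_tokens, output_tokens,
                 input_price_per_1k, output_price_per_1k):
    """Estimate monthly spend when input and output tokens have separate per-1K rates."""
    per_query = ((input_tokens / 1000) * input_price_per_1k
                 + (output_tokens / 1000) * output_price_per_1k)
    return queries * per_query

# Placeholder rates; substitute your provider's current price list.
short_queries = monthly_cost(100_000, 300, 100, 0.01, 0.03)
long_queries = monthly_cost(100_000, 1_500, 500, 0.01, 0.03)
print(f"short: ${short_queries:,.0f}/month, long: ${long_queries:,.0f}/month")
```

With these placeholder figures, short queries (300 input and 100 output tokens) come to about $600 per month at 100,000 queries, and longer ones (1,500 input and 500 output tokens) to about $3,000, both within the range quoted above.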

Subscription and Commitment Models: Some providers offer subscription or committed-spend plans that make costs more predictable for high-volume users. Google Cloud’s Vertex AI offers committed use discounts that can reduce costs by up to 57% for sustained workloads. Microsoft Azure provides similar enterprise agreements with volume-based pricing tiers.

Infrastructure and Integration Costs

Implementing LLM analytics solutions requires consideration of additional infrastructure costs including data storage, network bandwidth, and integration development. Cloud-based solutions typically include these costs in their pricing models, while on-premises deployments require separate infrastructure investments.

Data Transfer Costs: Organizations processing large datasets should account for the cost of moving data between systems. Major cloud providers commonly charge around $0.09 per GB for internet data egress at standard tiers, which can add up quickly for data-intensive analytics workloads. Implementing effective cloud cost optimization practices can help minimize these expenses.

Integration Development: Custom integration development costs vary widely based on complexity and existing system architecture. Simple API integrations might cost $10,000-$25,000, while comprehensive enterprise integrations can require $100,000+ investments.

Total Cost of Ownership Analysis

A comprehensive TCO analysis for LLM data science implementations should include direct usage costs, infrastructure expenses, personnel training, and ongoing maintenance requirements. Organizations typically see break-even points within 12-18 months for well-implemented solutions. Understanding FinOps principles is essential for effective cost management throughout the implementation lifecycle.

Cost Categories:

- Direct LLM usage fees: 40-60% of total costs

- Infrastructure and integration: 20-30%

- Training and change management: 10-15%

- Ongoing maintenance and optimization: 10-15%
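The break-even point depends on how the monthly benefit compares with running costs once the upfront investment is made. The sketch below makes that arithmetic explicit; every figure in it, including the $0.09 per GB egress rate and the 500 GB monthly volume, is a hypothetical input to be replaced with an organization's own estimates.

```python
def break_even_month(upfront_cost, monthly_run_cost, monthly_benefit, horizon=60):
    """Return the first month where cumulative net benefit covers the upfront cost, or None."""
    cumulative = -upfront_cost
    for month in range(1, horizon + 1):
        cumulative += monthly_benefit - monthly_run_cost
        if cumulative >= 0:
            return month
    return None

# Hypothetical figures for illustration only.
upfront = 60_000                    # integration development plus training
egress = 500 * 0.09                 # 500 GB/month at $0.09 per GB
run_cost = 3_000 + egress + 1_000   # LLM usage + egress + maintenance
benefit = 9_000                     # estimated monthly savings from automation
print(break_even_month(upfront, run_cost, benefit))  # 13
```

With these inputs the net monthly benefit is $4,955, so the $60,000 upfront cost is recovered in month 13, inside the 12-18 month window mentioned above.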

Cost Optimization Strategies

Effective cost management for LLM analytics implementations requires proactive optimization strategies including usage monitoring, model selection optimization, and efficient data pipeline design. Organizations implementing these strategies typically achieve 25-40% cost reductions compared to baseline implementations.

Caching and Preprocessing: Implementing intelligent caching mechanisms for common queries and preprocessing data to reduce token consumption can significantly reduce operational costs. Organizations report 20-35% cost savings through effective caching strategies. Additionally, detecting cost anomalies early can prevent unexpected expense spikes.
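A minimal sketch of such a cache, assuming answers can be reused for queries that differ only in case and whitespace: it keys entries on a normalized, hashed query string and evicts the least recently used entry when full. The `QueryCache` class and the stand-in `fake_llm` function are hypothetical.

```python
import hashlib
from collections import OrderedDict

class QueryCache:
    """A small LRU cache for LLM answers keyed on a normalized query string."""

    def __init__(self, max_entries=1000):
        self.max_entries = max_entries
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(query):
        # Normalize case and whitespace so trivially different phrasings share an entry.
        normalized = " ".join(query.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get_or_compute(self, query, compute):
        key = self._key(query)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = compute(query)
        self._store[key] = value
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return value

calls = []

def fake_llm(query):
    """Hypothetical stand-in for a paid LLM call; records each invocation."""
    calls.append(query)
    return f"answer to: {query}"

cache = QueryCache(max_entries=100)
cache.get_or_compute("Top regions by revenue?", fake_llm)
cache.get_or_compute("  top regions BY revenue? ", fake_llm)  # served from cache
print(len(calls), cache.hits, cache.misses)  # 1 1 1
```

In a real deployment the cache key should also include a data version or refresh timestamp, so that answers are invalidated when the underlying data changes.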

Model Right-Sizing: Selecting appropriate model variants for specific use cases ensures optimal cost-performance balance. Using smaller models for routine tasks and reserving larger models for complex analysis can reduce costs by 30-50%.
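A simple way to start is a rule-based router that sends short, lookup-style questions to a smaller model and anything that looks analytical to a larger one. The sketch below is a keyword heuristic under that assumption; the model names and the marker list are placeholders, and production routers often use a trained classifier instead.

```python
# Hypothetical markers of questions that need multi-step reasoning.
ANALYTICAL_MARKERS = ("why", "explain", "forecast", "correlat", "regression", "compare")

def choose_model(query, small_model="small-model", large_model="large-model",
                 max_simple_words=20):
    """Route long or analytical questions to the large model, everything else to the small one."""
    words = query.lower().split()
    text = " ".join(words)
    if len(words) > max_simple_words or any(m in text for m in ANALYTICAL_MARKERS):
        return large_model
    return small_model

print(choose_model("How many orders were placed yesterday?"))  # small-model
print(choose_model("Explain why churn rose in Q3"))             # large-model
```

Logging which model handled each query, alongside answer quality, shows whether the routing rules are sending too much or too little traffic to the expensive tier.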

Implementation Strategies and Best Practices

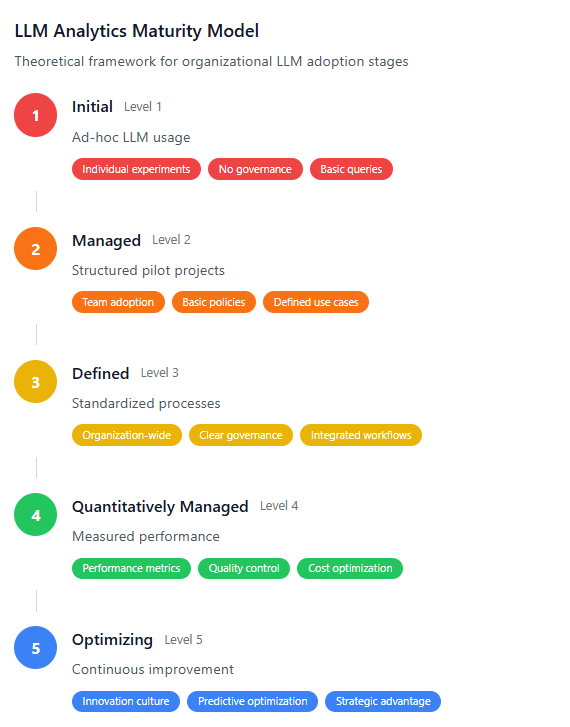

Successful implementation of LLM for data analysis requires careful planning, phased deployment, and comprehensive change management strategies. Organizations that follow structured implementation approaches achieve better outcomes and faster return on investment.

Planning and Assessment Phase

The initial planning phase should include comprehensive assessment of existing data infrastructure, analytical requirements, and organizational readiness for LLM adoption. This assessment guides technology selection, implementation timeline, and resource allocation decisions.

Data Readiness Assessment: Evaluate data quality, accessibility, and governance frameworks to ensure compatibility with LLM requirements. Organizations with mature data governance practices typically achieve 40% faster implementation timelines and better analytical outcomes.

Use Case Prioritization: Identify high-value use cases that align with business objectives and demonstrate clear ROI potential. Starting with well-defined, measurable use cases builds organizational confidence and supports broader adoption.

Pilot Implementation

Pilot implementations provide valuable learning opportunities while minimizing risk exposure. Successful pilot projects typically focus on specific business functions or analytical workflows, allowing teams to develop expertise and refine processes before broader deployment.

Success Metrics: Define clear success metrics including accuracy improvements, time savings, and user satisfaction scores. Pilot projects should demonstrate measurable value within 90 days to maintain organizational support and momentum.

User Training: Comprehensive training programs ensure effective utilization of LLM analyst capabilities. Organizations investing in structured training programs achieve 60% higher user adoption rates and better analytical outcomes.

Scaling and Optimization

Scaling successful pilot implementations requires careful attention to performance optimization, cost management, and user experience consistency. Organizations should develop standard operating procedures and governance frameworks to maintain quality as usage scales.

Performance Monitoring: Implement comprehensive monitoring systems to track system performance, accuracy metrics, and user satisfaction. Regular monitoring enables proactive optimization and ensures consistent analytical quality.
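Accuracy is easiest to track with a fixed evaluation set of questions whose answers are already known, rerun after every prompt, model, or data change. The sketch below assumes numeric answers and a hypothetical `answer_fn` that wraps the deployed system; the questions, values, and `stub_system` are invented for illustration.

```python
def evaluate(answer_fn, test_cases, tolerance=1e-6):
    """Score an analysis function against questions with known numeric answers."""
    failures = []
    for question, expected in test_cases:
        actual = answer_fn(question)
        if actual is None or abs(actual - expected) > tolerance:
            failures.append((question, expected, actual))
    accuracy = 1 - len(failures) / len(test_cases)
    return accuracy, failures

def stub_system(question):
    """Hypothetical stand-in for the deployed LLM analytics system."""
    return {"total revenue in Q1": 1200.0, "orders in March": 90.0}.get(question)

test_cases = [("total revenue in Q1", 1200.0), ("orders in March", 87.0)]
accuracy, failures = evaluate(stub_system, test_cases)
print(accuracy, failures)  # 0.5 [('orders in March', 87.0, 90.0)]
```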

Governance Framework: Establish clear governance policies for LLM usage including data access controls, quality standards, and ethical guidelines. Strong governance frameworks reduce risks and ensure compliant operations.

Integration with Existing Analytics Tools

Effective integration of LLM analytics capabilities with existing business intelligence and analytics infrastructure is critical for maximizing value and ensuring user adoption. Organizations should evaluate integration options based on technical requirements, user workflows, and long-term strategic objectives.

Business Intelligence Platform Integration

Modern BI platforms increasingly offer native LLM integration capabilities, enabling seamless incorporation of AI-powered analytics into existing dashboards and reporting workflows. Platforms like Tableau, Power BI, and Qlik Sense provide APIs and plugins for LLM integration.

Dashboard Enhancement: LLM integration enables natural language querying within existing dashboards, allowing business users to explore data conversationally without technical expertise. This capability significantly improves user engagement and analytical exploration.

Automated Insights: LLM-powered automated insight generation can enhance static reports with dynamic explanations, trend analysis, and predictive indicators. Organizations report 50% improvement in report comprehension and actionability through automated insight integration.

Data Pipeline Integration

Integrating LLM data science capabilities into existing data pipelines enables automated data quality assessment, pattern detection, and anomaly identification. This integration improves data reliability and accelerates insight discovery across analytical workflows.

Real-time Processing: Event streaming platforms such as Apache Kafka, combined with stream processing engines such as Apache Spark Structured Streaming, can feed data to LLM capabilities for real-time analysis and decision support. This integration enables faster responses to changing business conditions and emerging opportunities.

Batch Processing: ETL/ELT pipelines can leverage LLM capabilities for automated data profiling, quality assessment, and transformation logic generation. This automation reduces manual effort and improves data pipeline reliability.
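A batch profiling step can produce a compact per-column summary that rule-based checks or an LLM can then review, which is far cheaper than sending raw rows to a model. The sketch below is one possible shape for such a profile; the metrics chosen and the sample rows are assumptions.

```python
from collections import Counter

def profile(rows):
    """Summarize each column: null rate, distinct count, and numeric range or most common value."""
    columns = {key for row in rows for key in row}
    report = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        entry = {
            "null_rate": round(1 - len(present) / len(values), 3),
            "distinct": len(set(present)),
        }
        numeric = [v for v in present
                   if isinstance(v, (int, float)) and not isinstance(v, bool)]
        if numeric and len(numeric) == len(present):
            entry["min"], entry["max"] = min(numeric), max(numeric)
        else:
            entry["top"] = Counter(present).most_common(1)[0][0] if present else None
        report[col] = entry
    return report

rows = [
    {"id": 1, "country": "DE", "amount": 10.0},
    {"id": 2, "country": None, "amount": 25.5},
    {"id": 3, "country": "DE", "amount": None},
]
report = profile(rows)
print(report["country"])  # {'null_rate': 0.333, 'distinct': 1, 'top': 'DE'}
```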

API and Microservices Architecture

Modern organizations increasingly adopt API-first approaches for LLM integration, enabling flexible deployment across diverse applications and use cases. RESTful APIs and microservices architectures provide scalable integration options that support evolving business requirements.

Custom Applications: Organizations can develop custom analytical applications that expose LLM-based data science capabilities through well-designed APIs. This approach enables highly tailored solutions that align precisely with specific business processes.

Third-party Integrations: Standard API interfaces enable integration with third-party applications and vendor solutions, creating comprehensive analytical ecosystems that leverage best-of-breed capabilities across different functional areas.

ROI Case Studies from Data Science Teams

Real-world implementations of LLM for data analysis demonstrate significant return on investment across diverse industries and use cases. These case studies provide valuable insights into implementation strategies, success factors, and quantifiable business outcomes.

Financial Services: Automated Risk Analysis

A major investment bank implemented LLM-powered risk analysis capabilities that automated routine risk assessment tasks while improving analytical accuracy. The implementation processed over 10,000 risk scenarios daily, reducing analysis time from hours to minutes while improving prediction accuracy by 23%.

Implementation Details: The organization deployed a hybrid solution combining OpenAI GPT-4 for natural language processing with internal machine learning models for quantitative analysis. The system integrated with existing risk management platforms through RESTful APIs.

Results Achieved:

- 75% reduction in routine analysis time

- 23% improvement in risk prediction accuracy

- $2.3M annual cost savings through automation

- 40% increase in analytical coverage across portfolios

Lessons Learned: Success required significant investment in data quality improvement and analyst training. Organizations should expect 6-12 months for full implementation and user adoption.

Healthcare: Clinical Data Analysis

A healthcare research organization implemented LLM analytics for clinical trial data analysis, automating patient cohort identification and treatment outcome analysis. The system processed electronic health records and clinical trial data to identify potential participants and predict treatment responses.

Technical Implementation: The solution combined Azure OpenAI services with specialized medical NLP models, integrating with existing clinical data management systems through HL7 FHIR APIs.

Quantifiable Outcomes:

- 60% faster patient cohort identification

- 35% improvement in clinical trial enrollment rates

- $1.8M reduction in trial operational costs

- 90% accuracy in automated eligibility screening

Retail: Customer Behavior Analysis

A multinational retailer deployed LLM-powered customer analytics to enhance personalization and inventory optimization. The system analyzed customer transaction data, social media interactions, and market trends to generate actionable business insights.

Business Impact:

- 28% increase in personalized marketing effectiveness

- 15% improvement in inventory turnover rates

- $4.2M annual revenue increase through optimized recommendations

- 45% reduction in out-of-stock incidents

Implementation Strategy: The organization adopted a phased approach, starting with customer segmentation analysis before expanding to predictive inventory management and personalized marketing optimization.

Manufacturing: Predictive Maintenance

An automotive manufacturer implemented LLM data science capabilities for predictive maintenance analysis, processing sensor data from manufacturing equipment to predict failures and optimize maintenance schedules.

Technical Approach: The solution integrated IoT sensor data with maintenance records and operational documentation, using LLMs to interpret unstructured maintenance notes and correlate them with sensor patterns.

Operational Results:

- 42% reduction in unplanned equipment downtime

- 30% decrease in maintenance costs

- $3.1M annual savings through optimized maintenance scheduling

- 85% accuracy in failure prediction models

Cost Optimization Strategies

Effective cost optimization keeps LLM data analysis deployments sustainable while maximizing return on investment. Organizations can achieve significant cost reductions through the strategic approaches below.

Usage Optimization Techniques

Query Optimization: Implementing intelligent query preprocessing and result caching can reduce LLM API calls by 30-50%. Organizations should analyze query patterns to identify optimization opportunities and implement automated preprocessing pipelines.

Model Selection Strategy: Matching model size to the task optimizes the cost-performance balance. Routing simple queries to smaller models while reserving larger models for complex analysis can reduce costs by around 40% with little measurable impact on analytical quality.

Batch Processing: Aggregating individual queries into batch requests reduces API overhead and improves cost efficiency. Organizations processing high query volumes can achieve 20-30% cost savings through effective batching strategies.
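Part of the saving comes from not repeating shared context: if every request carries the same system prompt, batching N questions into one request pays for that prompt once instead of N times. The sketch below counts billed input tokens under that assumption; the token sizes are hypothetical, and output tokens are ignored.

```python
def chunk(items, size):
    """Split a list of queries into consecutive batches of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]

def billed_input_tokens(num_queries, system_prompt_tokens, tokens_per_query, batch_size):
    """Input tokens when each request carries the system prompt once plus its batched queries."""
    num_requests = -(-num_queries // batch_size)  # ceiling division
    return num_requests * system_prompt_tokens + num_queries * tokens_per_query

batches = chunk(list(range(1_000)), 20)
print(len(batches))  # 50
unbatched = billed_input_tokens(1_000, 100, 300, batch_size=1)  # 400000
batched = billed_input_tokens(1_000, 100, 300, batch_size=20)   # 305000
print(f"{1 - batched / unbatched:.0%} fewer input tokens")      # 24% fewer input tokens
```

With a 100-token system prompt and 300-token questions, batching 20 questions per request cuts input tokens from 400,000 to 305,000 for 1,000 questions. The trade-off is that answers must be parsed back out of one response, and a single failed request affects the whole batch.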

Infrastructure Optimization

Hybrid Deployment Models: Combining cloud-based LLM services with on-premises preprocessing can optimize costs for large-scale deployments. This approach keeps sensitive data internal while leveraging cloud capabilities for compute-intensive tasks.

Resource Right-Sizing: Continuously monitoring and adjusting compute resources ensures optimal cost utilization. Organizations should implement automated scaling policies that match resource allocation to actual demand patterns.

Data Pipeline Efficiency: Optimizing data preprocessing and pipeline efficiency reduces overall computational requirements. Effective pipeline design can achieve 25-35% cost reductions while improving processing speed. Organizations should consider cloud tagging strategies to better track and allocate costs across different analytical workloads.
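Once resources and API calls carry a workload tag, cost allocation reduces to grouping billing records by that tag. The sketch below assumes a simplified record shape (a `cost` field and a `tags` dictionary with a hypothetical `workload` key); actual billing exports differ by provider. Untagged spend is kept as its own line so it stays visible.

```python
from collections import defaultdict

def allocate_by_tag(records, tag_key="workload"):
    """Sum cost per tag value, reporting untagged spend under 'untagged'."""
    totals = defaultdict(float)
    for record in records:
        tag = record.get("tags", {}).get(tag_key, "untagged")
        totals[tag] += record["cost"]
    return dict(totals)

records = [
    {"cost": 120.0, "tags": {"workload": "churn-analysis"}},
    {"cost": 80.0, "tags": {"workload": "forecasting"}},
    {"cost": 30.0, "tags": {}},
    {"cost": 20.0, "tags": {"workload": "churn-analysis"}},
]
totals = allocate_by_tag(records)
print(totals)  # {'churn-analysis': 140.0, 'forecasting': 80.0, 'untagged': 30.0}
```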

Governance and Monitoring

Usage Governance: Implementing usage policies and approval workflows prevents uncontrolled cost escalation while maintaining analytical capabilities. Organizations should establish clear guidelines for LLM usage across different business functions.

Cost Monitoring: Comprehensive cost monitoring and alerting systems enable proactive cost management. Organizations should implement real-time cost tracking with automated alerts for unusual usage patterns.
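A simple form of such alerting compares each day's spend with a trailing window and flags days that sit several standard deviations above the recent mean. The sketch below uses a 7-day window and a 3-sigma threshold, both assumptions to tune; the spend series is invented.

```python
from statistics import mean, stdev

def spend_alerts(daily_spend, window=7, threshold=3.0):
    """Return indices of days whose spend exceeds the trailing mean by > threshold std devs."""
    alerts = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (daily_spend[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts

daily = [100, 104, 98, 101, 99, 103, 97, 102, 100, 310, 101]
print(spend_alerts(daily))  # [9]: the 310 spike on day 9
```

A single spike also inflates the standard deviation for the following week, which can mask a second spike; a median-based variant (median and median absolute deviation) is more robust when that matters.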

Performance Optimization: Regular performance reviews and optimization cycles ensure continued cost-effectiveness as usage patterns evolve. Organizations should conduct quarterly optimization reviews to identify new cost reduction opportunities.

Future Trends in LLM Analytics

The evolution of LLM analytics continues to accelerate, with emerging trends promising to further transform how organizations approach data analysis and decision-making. Understanding these trends helps organizations prepare for future opportunities and challenges.

Specialized Domain Models

The development of industry-specific LLMs optimized for particular domains is expected to improve analytical accuracy while reducing costs. These specialized models will incorporate domain expertise and terminology, providing more relevant insights for specific industries.

Vertical Integration: Industry-specific LLM solutions will offer pre-built analytical capabilities tailored to sector requirements, reducing implementation complexity and improving time-to-value for organizations.

Multimodal Analytics Integration

Future LLM data science platforms will seamlessly integrate multiple data modalities including text, images, audio, and sensor data within unified analytical workflows. This integration will enable more comprehensive analysis and richer insights.

Edge Computing Integration: The combination of edge computing with LLM capabilities will enable real-time analytics at data sources, reducing latency and improving decision-making speed for time-critical applications.

Automated Model Development

Advanced AutoML capabilities powered by LLMs will automate the entire machine learning pipeline from data preparation through model deployment and monitoring. This automation will further democratize data science capabilities across organizations.

Continuous Learning: Future systems will implement continuous learning capabilities that automatically adapt to changing data patterns and business requirements without manual intervention.

Conclusion

Using LLMs for data analysis represents a fundamental shift in how organizations approach analytical workflows, offering new opportunities for automation, insight generation, and better decision-making. Organizations that implement these technologies successfully can gain significant competitive advantages through improved analytical capabilities, reduced operational costs, and faster insight discovery.

The selection of appropriate LLM analytics solutions requires careful consideration of technical requirements, cost constraints, and organizational objectives. Organizations should evaluate solutions based on analytical capabilities, integration requirements, cost structure, and long-term strategic alignment. The most successful implementations combine advanced technical capabilities with comprehensive change management strategies and strong governance frameworks.

Cost optimization remains a critical success factor for sustainable LLM analytics deployments. Organizations can achieve significant cost reductions through strategic optimization approaches including usage optimization, infrastructure right-sizing, and effective governance policies. The total cost of ownership analysis should encompass direct usage costs, infrastructure requirements, integration expenses, and ongoing maintenance needs.

The future of LLM data science promises continued innovation with specialized domain models, multimodal analytics integration, and automated model development capabilities. Organizations that establish strong foundations today will be well-positioned to leverage these emerging capabilities as they become available.

Through careful planning, strategic implementation, and continuous optimization, organizations can use LLM-based data science capabilities to drive business value, improve decision-making, and stay competitive in increasingly data-driven markets. The key to success lies in balancing technical capabilities with business objectives while keeping the focus on measurable outcomes and sustainable operations.

As the landscape continues to evolve, organizations must remain adaptive and committed to continuous learning and optimization. The investment in LLM analytics capabilities today establishes the foundation for future innovation and competitive advantage in the data-driven economy.