Introduction

By 2025, Large Language Models (LLMs) have become mainstream across industries. From marketing and customer support automation to data analytics and software development, enterprises are embedding AI into nearly every workflow.

But while LLMs drive innovation, they also introduce a new and often invisible problem: uncontrolled AI spending. Every API call, every processed token, and every fine-tuning job adds to cloud bills that can grow quickly and unpredictably without proper management.

This is why LLM cost management is no longer optional. Much like SaaS spend management and cloud cost optimization, tracking and optimizing AI expenses has become a key component of FinOps strategies.

With solutions like Binadox LLM Cost Tracker, organizations can finally visualize, analyze, and control the costs of AI models such as OpenAI GPT or Azure OpenAI, ensuring transparency and sustainable growth.

Understanding LLM Costs

To understand why cost management is crucial, it’s important to break down what actually drives LLM expenses.

LLM costs typically include:

- Compute costs – The processing power used for inference, fine-tuning, and large-scale training.

- Storage and data transfer – Hosting datasets, logs, and generated outputs.

- API usage – Per-token fees for both input (prompt) and output (completion) tokens when calling third-party providers.

- Integration costs – SaaS and cloud infrastructure used to support AI-based operations.

Each token — whether input or output — carries a price tag. When multiplied across millions of queries per month, costs can quickly escalate.
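To see how quickly per-token pricing compounds, here is a minimal Python sketch of the arithmetic. It assumes prices are quoted per million tokens, a common convention among API providers. The rates used ($2 per million input tokens, $8 per million output tokens) are hypothetical placeholders, not any vendor's actual price list.

```python
# Minimal sketch of per-token cost arithmetic.
# All prices below are hypothetical placeholders, not real provider rates.

def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1m: float, output_price_per_1m: float) -> float:
    """Cost of one request in dollars, with prices quoted per 1M tokens."""
    return (input_tokens * input_price_per_1m
            + output_tokens * output_price_per_1m) / 1_000_000


def monthly_cost(requests_per_month: int, avg_input_tokens: int,
                 avg_output_tokens: int, input_price_per_1m: float,
                 output_price_per_1m: float) -> float:
    """Scale the average per-request cost up to a monthly request volume."""
    per_request = request_cost(avg_input_tokens, avg_output_tokens,
                               input_price_per_1m, output_price_per_1m)
    return per_request * requests_per_month


if __name__ == "__main__":
    # Hypothetical request: 1,000 input + 500 output tokens at $2 / $8 per 1M tokens.
    print(f"per request: ${request_cost(1_000, 500, 2.0, 8.0):.4f}")
    # The same request shape, repeated one million times a month.
    print(f"per month:   ${monthly_cost(1_000_000, 1_000, 500, 2.0, 8.0):,.2f}")
```

At these assumed rates a single request costs $0.006, yet a million such requests add up to $6,000 a month. Because output tokens are usually priced higher than input tokens, long responses drive costs up faster than long prompts.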

For example, one department may be using OpenAI’s GPT-4 for content generation, another may run analytics queries on Azure OpenAI, while customer support teams integrate AI chatbots. Without a centralized view, the company may not even realize how fragmented and expensive its AI usage has become.

This is exactly where Binadox’s LLM Cost Tracker comes in — providing real-time visibility into total tokens, spending trends, and potential savings across multiple providers.

Why LLM Cost Management Matters in 2025

1. The Explosion of AI Adoption

The number of organizations integrating AI into their operations is growing rapidly. According to market projections, enterprise spending on LLM-based tools is expected to increase by more than 40% annually through 2026.

This rapid growth mirrors what happened in the SaaS era — a boom in adoption followed by hidden costs, overlapping subscriptions, and lack of governance. LLMs are following the same trajectory, but with even higher financial stakes.

2. Unpredictable Usage and Billing

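Unlike traditional software licenses or even per-seat SaaS subscriptions, LLM APIs typically use consumption-based pricing. Costs scale with the number of tokens your teams send and receive, multiplied by the per-token price of the models they choose.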

Without monitoring, these usage patterns can create massive budget overruns. For example, developers testing prompts or users automating content generation might consume millions of tokens overnight.

Binadox LLM Cost Tracker solves this by visualizing your cost trend over time and letting you set budget thresholds to alert teams when spending approaches defined limits.

3. Multi-Cloud and SaaS Integration

Most organizations don’t rely on just one AI vendor. They combine providers such as OpenAI, Azure OpenAI, Google Vertex AI, and Anthropic, often connected to SaaS tools like Slack, Salesforce, or Notion. Each integration generates its own bill.

The result? Fragmented data, complex invoices, and no clear visibility into total AI spending.

With Binadox, you can track and consolidate all AI-related costs in one dashboard — alongside your SaaS and cloud expenses — for full financial transparency.

4. Compliance and Governance

Beyond finances, AI models process large volumes of sensitive data. Managing who uses which model, how data is stored, and how much each operation costs is essential for compliance, accountability, and security.

Effective LLM cost management ensures not only financial efficiency but also operational transparency — aligning with broader corporate governance standards.

Typical LLM Cost Challenges

Even companies that monitor their cloud and SaaS spending often struggle with AI visibility. Here are some of the most common challenges organizations face today:

| Challenge | Description | Example | Binadox Solution |
|---|---|---|---|
| Shadow AI Usage | Teams use AI tools without IT or finance approval. | Developers or marketing teams connect directly to GPT APIs. | Centralized dashboard and connection management. |
| Untracked API Costs | Usage spikes go unnoticed until invoices arrive. | Token spending doubles over a weekend due to automation. | Budget alerts and anomaly detection. |
| Provider Fragmentation | Costs spread across multiple AI vendors. | Separate accounts for OpenAI, Azure, and ChatGPT. | Unified cost overview by provider and account. |
| Forecasting Difficulty | AI costs are hard to predict month to month. | Token output fluctuates with user behavior. | Cost trend visualization and predictive insights. |

Managing LLM costs effectively means not only tracking them but understanding why they happen — and empowering decision-makers to act on that information.

Key Features of Binadox LLM Cost Tracker

The Binadox LLM Cost Tracker provides organizations with the tools to gain full visibility, detect inefficiencies, and take actionable steps to optimize AI spending. The platform is designed for FinOps, cloud management, and data teams seeking better control over model-related expenses.

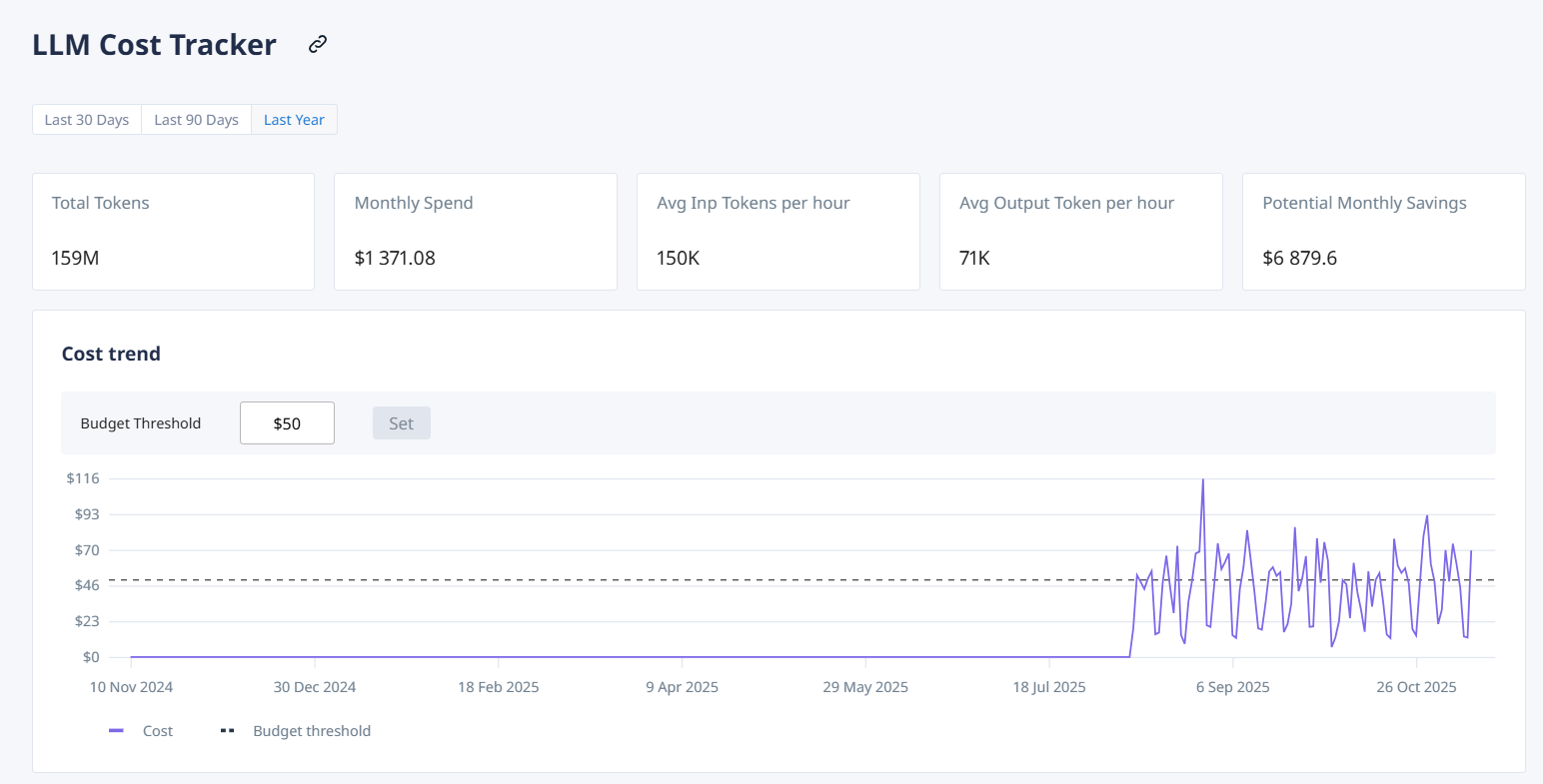

Centralized Dashboard

Binadox brings all LLM providers into a single interface — from OpenAI and Azure OpenAI to ChatGPT integrations.

At a glance, users can view:

- Total tokens processed (input + output)

- Monthly spending trends

- Average tokens per hour

- Potential monthly savings

This unified overview helps teams identify where their AI budgets are being consumed most heavily and which workloads can be optimized.
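These headline metrics are straightforward aggregations over usage records. The sketch below assumes a hypothetical `UsageRecord` shape and an assumed definition of "average tokens per hour" (total tokens divided by the hours the data spans); it illustrates the idea and is not how Binadox computes its widgets. Potential savings are left out because they depend on the product's own recommendation logic.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class UsageRecord:
    # Hypothetical record shape; real provider exports use their own field names.
    timestamp: datetime
    provider: str
    input_tokens: int
    output_tokens: int
    cost: float  # dollars


def summarize(records: list[UsageRecord]) -> dict:
    """Compute headline dashboard metrics from raw usage records."""
    if not records:
        return {"total_tokens": 0, "spend_by_month": {}, "avg_tokens_per_hour": 0.0}

    # Total tokens processed counts both input and output tokens.
    total_tokens = sum(r.input_tokens + r.output_tokens for r in records)

    # Spend per calendar month, keyed as "YYYY-MM".
    spend_by_month: dict[str, float] = defaultdict(float)
    for r in records:
        spend_by_month[r.timestamp.strftime("%Y-%m")] += r.cost

    # Assumed definition: total tokens over the hours spanned by the data,
    # with a one-hour floor so a single record does not divide by zero.
    start = min(r.timestamp for r in records)
    end = max(r.timestamp for r in records)
    hours = max((end - start).total_seconds() / 3600, 1.0)

    return {
        "total_tokens": total_tokens,
        "spend_by_month": dict(spend_by_month),
        "avg_tokens_per_hour": total_tokens / hours,
    }


if __name__ == "__main__":
    records = [
        UsageRecord(datetime(2025, 3, 1, 9), "Azure OpenAI", 1_200, 300, 0.02),
        UsageRecord(datetime(2025, 3, 1, 11), "ChatGPT", 800, 200, 0.01),
    ]
    print(summarize(records))
```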

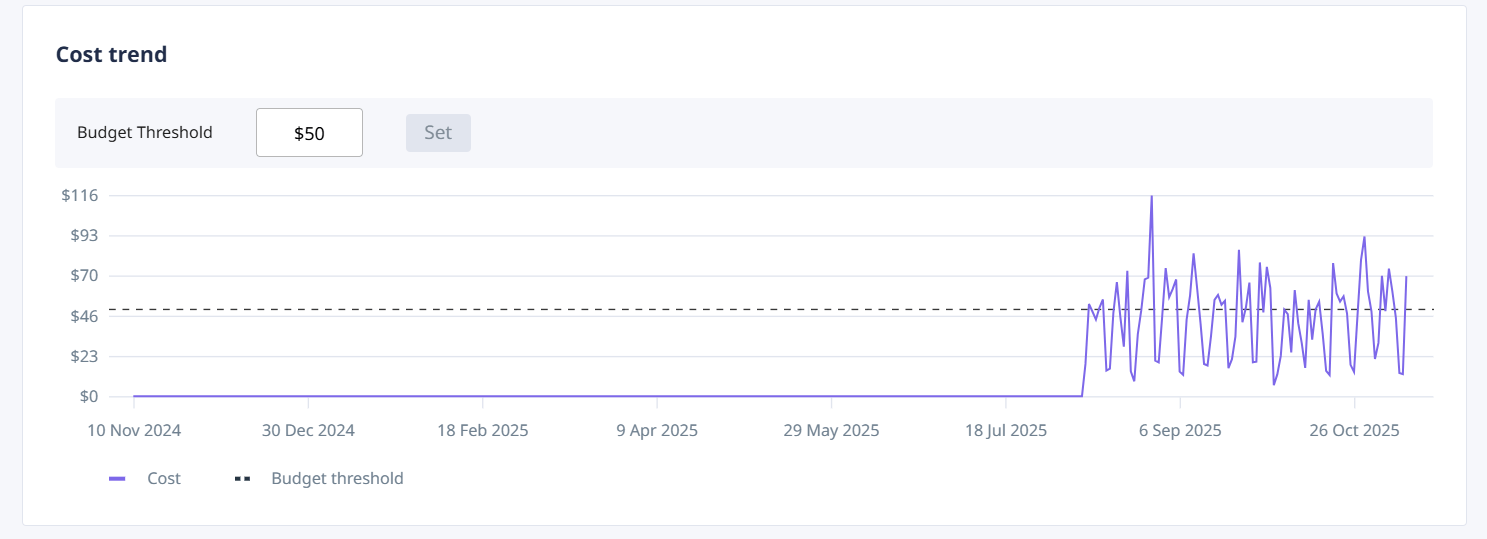

Cost Trend Visualization

The Cost Trend graph provides a dynamic visualization of how AI expenses evolve over time.

Teams can set custom budget thresholds, like $50 or $500 per project, and instantly detect when costs approach or exceed defined limits.

This proactive approach helps prevent billing surprises and supports real-time budget governance.
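Threshold alerts come down to comparing current spend against a limit, usually with an early-warning level below it. In this minimal sketch the 80% warning ratio and the project names are assumptions for illustration; the budgets reuse the $50 and $500 examples from the text.

```python
from enum import Enum


class BudgetStatus(Enum):
    OK = "ok"
    APPROACHING = "approaching"
    EXCEEDED = "exceeded"


def budget_status(spend: float, budget: float, warn_ratio: float = 0.8) -> BudgetStatus:
    """Classify current spend against a budget threshold.

    warn_ratio is an assumed early-warning level (80% of the budget by default).
    """
    if budget <= 0:
        raise ValueError("budget must be positive")
    if spend >= budget:
        return BudgetStatus.EXCEEDED
    if spend >= warn_ratio * budget:
        return BudgetStatus.APPROACHING
    return BudgetStatus.OK


if __name__ == "__main__":
    # Hypothetical projects as (current spend, budget) pairs.
    projects = {
        "support-chatbot": (41.20, 50.0),
        "analytics": (120.0, 500.0),
        "content-generation": (512.75, 500.0),
    }
    for name, (spend, budget) in projects.items():
        print(f"{name}: {budget_status(spend, budget).value}")
```

Here the chatbot project would trigger an early warning at $41.20 of its $50 budget, while the content project has already exceeded its $500 limit.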

The trend line can also reveal usage spikes that correlate with product launches, data migrations, or automation tests — giving FinOps managers actionable insights to forecast future AI demand.
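One simple way to turn a cost trend into a forecast is a run-rate projection: divide month-to-date spend by the days elapsed and multiply by the number of days in the month. The sketch below is that naive linear model, offered as an illustration rather than as the predictive method Binadox uses; the spend figure is hypothetical.

```python
import calendar
from datetime import date


def forecast_month_end(month_to_date_spend: float, today: date) -> float:
    """Project month-end spend from the average daily run rate so far.

    Assumes spend to date covers days 1..today.day and that the remaining
    days continue at the same average pace.
    """
    days_elapsed = today.day
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    daily_rate = month_to_date_spend / days_elapsed
    return daily_rate * days_in_month


if __name__ == "__main__":
    # Hypothetical: $1,200 spent by April 10 is $120/day over a 30-day month.
    print(f"${forecast_month_end(1_200.0, date(2025, 4, 10)):,.2f}")
```

A single spike, such as a data migration, inflates the run rate for the rest of the month, so it can help to exclude known one-off events before projecting.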

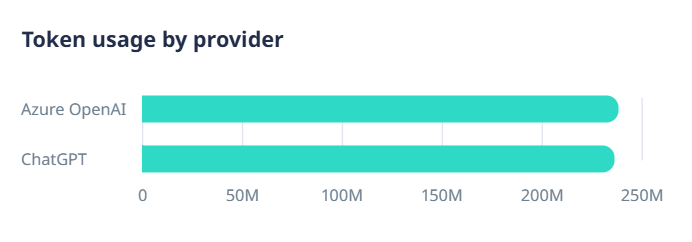

Token Usage by Provider

With multi-vendor AI strategies becoming standard, it’s often unclear which provider contributes most to total expenses.

The Token Usage by Provider widget breaks down consumption between platforms such as Azure OpenAI and ChatGPT.

This comparison enables informed vendor management decisions — for instance, shifting workloads to the provider with a better cost-performance ratio.
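A per-provider breakdown only needs token and cost totals for each vendor. This sketch, using hypothetical monthly figures, reports each provider's share of tokens and its effective cost per million tokens. It covers only the cost side of a cost-performance comparison; output quality has to be measured separately.

```python
def provider_breakdown(usage: dict[str, tuple[int, float]]) -> dict[str, dict[str, float]]:
    """Summarize token share and effective price per provider.

    `usage` maps provider name -> (tokens processed, total cost in dollars).
    """
    total_tokens = sum(tokens for tokens, _ in usage.values())
    breakdown = {}
    for provider, (tokens, cost) in usage.items():
        breakdown[provider] = {
            # Percentage of all tokens handled by this provider.
            "token_share_pct": 100 * tokens / total_tokens if total_tokens else 0.0,
            # Effective dollars per one million tokens.
            "cost_per_1m_tokens": cost * 1_000_000 / tokens if tokens else 0.0,
        }
    return breakdown


if __name__ == "__main__":
    # Hypothetical monthly figures for two providers.
    usage = {"Azure OpenAI": (60_000_000, 300.0), "ChatGPT": (40_000_000, 80.0)}
    for provider, stats in provider_breakdown(usage).items():
        print(provider, stats)
```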

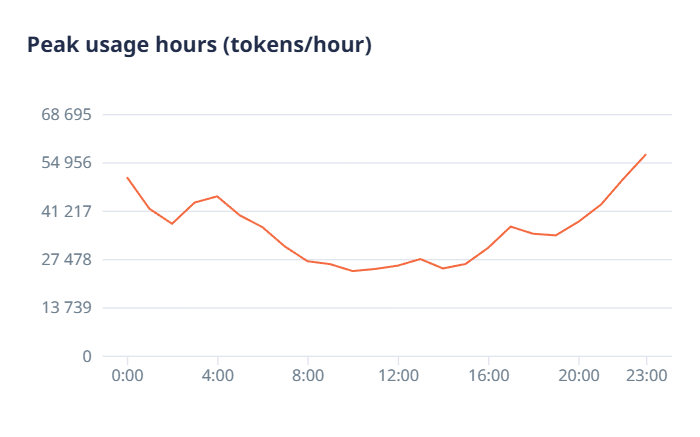

Peak Usage Hours

Understanding when your LLMs are used most heavily is key to reducing operational costs.

The Peak Usage Hours chart shows activity patterns by hour, highlighting when token usage (and therefore cost) reaches its highest levels.

Teams can then schedule workloads more efficiently — for example, running batch processes or non-critical tasks during off-peak hours to balance system load and spending.
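A peak-hours chart is essentially a histogram of token usage by hour of day. Here is a minimal sketch, assuming usage events arrive as hypothetical `(timestamp, tokens)` pairs already converted to a single time zone:

```python
from collections import Counter
from datetime import datetime


def tokens_by_hour(events: list[tuple[datetime, int]]) -> Counter:
    """Sum token counts per hour of day (0-23).

    Assumes all timestamps are already in one time zone, such as the team's local time.
    """
    totals: Counter = Counter()
    for ts, tokens in events:
        totals[ts.hour] += tokens
    return totals


def peak_hours(events: list[tuple[datetime, int]], top_n: int = 3) -> list[int]:
    """Return the top_n hours of day ranked by total token usage."""
    return [hour for hour, _ in tokens_by_hour(events).most_common(top_n)]


if __name__ == "__main__":
    events = [
        (datetime(2025, 3, 3, 9, 15), 5_000),
        (datetime(2025, 3, 3, 9, 45), 3_000),
        (datetime(2025, 3, 3, 14, 0), 6_000),
        (datetime(2025, 3, 4, 2, 0), 500),
    ]
    print(peak_hours(events, top_n=2))  # prints [9, 14]
```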

Spend Analysis by Account

The Spend Analysis by Account table aggregates all financial and usage data across connected accounts.

Each entry displays:

- Number of requests

- Tokens processed

- Total cost

- Active period

For example:

- Demo Azure OpenAI: 121,931 requests, $3,609.49 spent

- Demo ChatGPT: 119,300 requests, $552.54 spent

This level of granularity allows organizations to benchmark usage, assign accountability by department, and identify which integrations deliver the most value per dollar.
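Normalizing spend by request volume makes the two demo accounts directly comparable. This short sketch uses only the figures quoted in the example; cost per request is just one possible value-per-dollar proxy and ignores differences in prompt size or model choice between the accounts.

```python
# Figures copied from the demo accounts in the example above.
ACCOUNTS = {
    "Demo Azure OpenAI": {"requests": 121_931, "cost": 3_609.49},
    "Demo ChatGPT": {"requests": 119_300, "cost": 552.54},
}


def cost_per_request(requests: int, cost: float) -> float:
    """Average dollars spent per request; 0.0 for an account with no requests."""
    return cost / requests if requests else 0.0


if __name__ == "__main__":
    # Rank accounts from most to least expensive per request.
    ranked = sorted(ACCOUNTS.items(),
                    key=lambda item: cost_per_request(**item[1]),
                    reverse=True)
    for name, stats in ranked:
        print(f"{name}: ${cost_per_request(**stats):.4f} per request")
```

Although both accounts handle a similar number of requests, the Azure OpenAI account costs about $0.0296 per request against about $0.0046 for ChatGPT, more than six times as much. A gap like that is a prompt to check which models and prompt sizes each account uses.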

Best Practices for LLM Cost Optimization

Optimizing LLM expenses requires both visibility and strategic action. Here are key practices that can significantly reduce waste and improve budget predictability.

Set Budget Thresholds and Alerts: Start by defining budget limits at the project or provider level. With Binadox, teams can set automated alerts to notify them when spending crosses predefined thresholds – ensuring costs remain under control before they escalate.

Analyze Token Distribution: Regularly review which providers and models consume the most tokens. If one account’s usage doubles without a corresponding increase in productivity, it may indicate overuse, inefficiency, or misconfiguration.
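The "usage doubles without a corresponding increase in productivity" check can be expressed as tokens consumed per unit of useful work. In this sketch, `units_of_work` is a hypothetical productivity measure (resolved tickets, published articles, and so on) and the 2x flag threshold is an assumption.

```python
def tokens_per_unit(tokens: int, units_of_work: int) -> float:
    """Tokens consumed per unit of useful output, e.g. per resolved ticket."""
    if units_of_work <= 0:
        raise ValueError("units_of_work must be positive")
    return tokens / units_of_work


def flag_inefficiency(prev_tokens: int, prev_units: int,
                      curr_tokens: int, curr_units: int,
                      max_ratio: float = 2.0) -> bool:
    """Return True when tokens per unit of work grew by max_ratio or more."""
    before = tokens_per_unit(prev_tokens, prev_units)
    after = tokens_per_unit(curr_tokens, curr_units)
    if before == 0:
        # No baseline usage: any new usage counts as growth worth reviewing.
        return after > 0
    return after / before >= max_ratio


if __name__ == "__main__":
    # Hypothetical: tokens roughly doubled (4M -> 8.4M) while tickets barely moved (2,000 -> 2,100).
    print(flag_inefficiency(4_000_000, 2_000, 8_400_000, 2_100))
```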

Automate Cost Monitoring: Instead of manual oversight, leverage automation. Binadox automation rules can trigger actions (like pausing integrations or notifying admins) when anomalies occur.
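Automation rules of this kind pair a detection condition with an action. The sketch below swaps in a simple z-score test on daily spend (flag a day more than three standard deviations above the recent mean) and takes the action as a caller-supplied function. Both the statistic and the hypothetical `notify_admins` action are assumptions, not Binadox's detection logic or API.

```python
import statistics
from typing import Callable


def is_anomalous(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's spend if it is more than z_threshold standard deviations above the historical mean."""
    if len(history) < 2:
        return False  # statistics.stdev needs at least two data points
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today > mean  # flat history: any increase stands out
    return (today - mean) / stdev > z_threshold


def run_rule(history: list[float], today: float,
             action: Callable[[float, float], None]) -> bool:
    """Evaluate the rule and call action(today, baseline_mean) when it fires."""
    if is_anomalous(history, today):
        action(today, statistics.mean(history))
        return True
    return False


def notify_admins(today: float, baseline: float) -> None:
    # Hypothetical action; a real rule might post to chat or pause an integration.
    print(f"ALERT: spend ${today:.2f} vs. baseline ${baseline:.2f}")


if __name__ == "__main__":
    last_week = [40.0, 42.0, 38.0, 41.0, 39.0, 40.0, 43.0]  # hypothetical daily spend
    run_rule(last_week, 95.0, notify_admins)  # fires
    run_rule(last_week, 44.0, notify_admins)  # within normal variation
```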

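Evaluate Peak Usage Patterns: Move non-urgent, computationally heavy operations away from the peak periods identified in your usage graph. Per-token prices rarely change with the time of day, so the savings come from smoothing demand: fewer rate-limit collisions during business hours and less peak capacity to pay for where throughput is provisioned, all without affecting user experience.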
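Scheduling around peaks can be as simple as delaying a batch job until the first hour outside the peak window. Here is a minimal sketch, where the peak hours (for example, read off a peak-usage chart) are supplied as a set of hours of day; the business-hours window used in the example is hypothetical.

```python
from datetime import datetime, timedelta


def next_off_peak_start(now: datetime, peak_hours: set[int]) -> datetime:
    """Return the earliest time at or after `now` whose hour is not a peak hour."""
    if set(range(24)) <= peak_hours:
        raise ValueError("every hour of the day is marked as peak")
    candidate = now
    while candidate.hour in peak_hours:
        # Jump to the top of the next hour and check again.
        candidate = candidate.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    return candidate


if __name__ == "__main__":
    business_hours = set(range(9, 18))  # hypothetical peak: 09:00 to 17:59
    print(next_off_peak_start(datetime(2025, 3, 3, 14, 30), business_hours))
    # prints 2025-03-03 18:00:00
```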

Rightsize Model Selection: Use lighter models (e.g., GPT-4o mini or smaller fine-tuned models) for basic tasks, reserving premium versions for advanced reasoning or critical business operations. Rightsizing is as crucial in AI as it is in cloud instance management – it’s about paying only for what you truly need.
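Rightsizing is often implemented as a routing rule that sends each task to the cheapest model tier adequate for it. In this sketch the tier names, the prices, and the set of task types that need the premium tier are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                   # hypothetical model identifier
    input_price_per_1m: float   # hypothetical $ per 1M input tokens
    output_price_per_1m: float  # hypothetical $ per 1M output tokens


LIGHT = ModelTier("light-model", 0.20, 0.80)
PREMIUM = ModelTier("premium-model", 2.00, 8.00)

# Assumed task types that genuinely need advanced reasoning.
PREMIUM_TASKS = {"contract_review", "multi_step_analysis"}


def choose_model(task_type: str) -> ModelTier:
    """Route basic tasks to the light tier; reserve the premium tier for demanding work."""
    return PREMIUM if task_type in PREMIUM_TASKS else LIGHT


def task_cost(tier: ModelTier, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one call, with prices quoted per 1M tokens."""
    return (input_tokens * tier.input_price_per_1m
            + output_tokens * tier.output_price_per_1m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 ticket-tagging calls of 500 input + 100 output tokens.
    routed = choose_model("ticket_tagging")
    print(routed.name, f"${task_cost(routed, 500, 100) * 10_000:,.2f}")
    print(PREMIUM.name, f"${task_cost(PREMIUM, 500, 100) * 10_000:,.2f}")
```

Under these assumed prices the light tier handles the same workload for a tenth of the cost. In practice you would also confirm that output quality on the routed tasks stays acceptable.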

Conclusion

The rise of LLMs marks a new era of digital transformation – and with it, a new financial challenge.

LLM cost management has emerged as a mission-critical practice for organizations that want to innovate responsibly while maintaining budget discipline.

With Binadox LLM Cost Tracker, teams can:

- Monitor AI usage across all providers

- Detect anomalies and overspending instantly

- Set budget thresholds and alerts

- Generate unified reports and actionable insights

By combining AI, SaaS, and cloud cost visibility, Binadox gives businesses complete control over their digital spending – ensuring efficiency, scalability, and long-term profitability.